Theses in Robotics

Revision as of 15:03, 16 December 2016

Projects in Robotics

The main objective of the following projects is to give students experience in working with advanced robotics technology. Our group is active in several R&D projects involving human-robot collaboration, intuitive teleoperation of robots, and autonomous navigation of unmanned mobile platforms. Our main software platforms are the Robot Operating System (ROS), for developing software for advanced robot systems, and Gazebo, for running realistic robotic simulations.

Development of demonstrative and promotional applications for KUKA youBot

The goal of this project is to develop promotional use cases for the KUKA youBot that demonstrate the capabilities of modern robotics and inspire people to get involved with it. Possible robotic demos include:

- using 3D vision for human and/or environment detection,

- interactive navigation,

- autonomous path planning,

- different pick-and-place applications,

- and human-robot collaboration.

ROS drivers for open-source mobile robot

The goal of this project is to develop Robot Operating System (ROS) wrapper functions for the drivers of an open-source mobile robot platform that has been used successfully in Robotex competitions. The outcome will open up many educational uses and rapid-prototyping opportunities for the platform.
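At the core of such a wrapper there is usually a callback that turns a ROS velocity command (the `linear.x` and `angular.z` fields of a `geometry_msgs/Twist` message) into wheel commands the platform's firmware understands. The sketch below assumes a differential-drive base and a hypothetical ASCII serial protocol (`sd:<left>:<right>`); the wheel geometry, the speed limit and the command format are placeholders, not the Robotex platform's actual interface.

```python
from dataclasses import dataclass


@dataclass
class DiffDriveConfig:
    # Hypothetical geometry; replace with the real platform's measurements.
    wheel_radius: float = 0.035      # metres
    wheel_separation: float = 0.20   # metres, distance between the wheel contact points
    max_wheel_speed: float = 20.0    # rad/s, assumed firmware limit


def twist_to_wheel_speeds(linear_x, angular_z, cfg):
    """Differential-drive inverse kinematics: body velocity -> wheel angular velocities (rad/s)."""
    v_left = linear_x - angular_z * cfg.wheel_separation / 2.0
    v_right = linear_x + angular_z * cfg.wheel_separation / 2.0
    w_left = v_left / cfg.wheel_radius
    w_right = v_right / cfg.wheel_radius
    # Scale both wheels by the same factor so the commanded curvature survives saturation.
    peak = max(abs(w_left), abs(w_right))
    if peak > cfg.max_wheel_speed:
        scale = cfg.max_wheel_speed / peak
        w_left *= scale
        w_right *= scale
    return w_left, w_right


def encode_command(w_left, w_right):
    """Format a command for a hypothetical ASCII serial protocol: "sd:<left>:<right>" plus newline."""
    return "sd:{:.2f}:{:.2f}\n".format(w_left, w_right)
```

In a ROS 1 node, a `rospy.Subscriber` callback on `cmd_vel` would pass `msg.linear.x` and `msg.angular.z` to `twist_to_wheel_speeds` and write the encoded string to the serial port (for example with pyserial). Keeping the kinematics free of ROS imports makes it easy to unit-test without a running ROS master.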

Detecting features of urban and off-road surroundings

Accurate navigation of self-driving unmanned robotic platforms requires identifying traversable terrain. Combining point-cloud data with RGB information about the robot's environment can help autonomous systems make correct decisions. The goal of this work is to develop algorithms for terrain classification.
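A simple baseline for combining the two data sources, before any learned classifier, is to bin the coloured point cloud into a 2D grid, use the height spread in each cell as a geometric obstacle cue, and use a colour index as a surface cue. The sketch below uses the excess-green index (ExG = 2g − r − b on chromatic coordinates) to separate vegetation from bare ground; the cell size and both thresholds are illustrative assumptions, not tuned values.

```python
import math
from collections import defaultdict


def classify_terrain(points, cell_size=0.5, max_step=0.15, exg_threshold=0.1):
    """Label grid cells as 'obstacle', 'vegetation' or 'ground'.

    points: iterable of (x, y, z, r, g, b), coordinates in metres, colours in [0, 1].
    cell_size, max_step and exg_threshold are illustrative values only.
    """
    cells = defaultdict(list)
    for x, y, z, r, g, b in points:
        key = (math.floor(x / cell_size), math.floor(y / cell_size))
        cells[key].append((z, r, g, b))

    labels = {}
    for key, pts in cells.items():
        zs = [p[0] for p in pts]
        # Geometric cue: a height spread larger than the robot can climb.
        if max(zs) - min(zs) > max_step:
            labels[key] = "obstacle"
            continue
        # Colour cue: mean excess-green index on chromatic (normalised) coordinates.
        exg = 0.0
        for _, r, g, b in pts:
            total = r + g + b
            if total > 0:
                exg += (2 * g - r - b) / total
        exg /= len(pts)
        labels[key] = "vegetation" if exg > exg_threshold else "ground"
    return labels
```

A learned classifier would replace the two hand-set rules with per-cell features (height statistics, colour histograms) fed to a trained model, but a rule-based baseline like this is useful for checking that the point cloud and the RGB image are correctly registered.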

Building large-scale robotics simulation environments

The aim is to develop large-area simulation worlds for mobile robotics. When developing robot navigation algorithms, it is more time- and cost-efficient to test robot behavior in a wide range of realistically simulated worlds, both indoor and outdoor. This work focuses on designing robot simulation environments using the open-source platform Gazebo.
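Large worlds are often easier to produce procedurally than by hand in the Gazebo editor. The sketch below writes an SDF world containing the stock `sun` and `ground_plane` models plus a grid of static box obstacles, using only Python's `xml.etree.ElementTree`. The `generate_world` helper, the obstacle layout and the SDF version string are illustrative assumptions; check which SDF version your Gazebo release expects.

```python
import xml.etree.ElementTree as ET


def _box_geometry(parent, size):
    """Attach <geometry><box><size>sx sy sz</size></box></geometry> to parent."""
    geometry = ET.SubElement(parent, "geometry")
    box = ET.SubElement(geometry, "box")
    ET.SubElement(box, "size").text = "{} {} {}".format(*size)


def generate_world(rows, cols, spacing=4.0, box_size=(1.0, 1.0, 1.0), name="generated_world"):
    """Return an SDF world string with a rows x cols grid of static box obstacles."""
    sdf = ET.Element("sdf", version="1.6")
    world = ET.SubElement(sdf, "world", name=name)
    # Stock models from the Gazebo model database.
    for uri in ("model://sun", "model://ground_plane"):
        include = ET.SubElement(world, "include")
        ET.SubElement(include, "uri").text = uri

    for i in range(rows):
        for j in range(cols):
            model = ET.SubElement(world, "model", name="box_{}_{}".format(i, j))
            ET.SubElement(model, "static").text = "true"
            # Pose is "x y z roll pitch yaw"; lift the box by half its height so it rests on the ground.
            x, y, z = i * spacing, j * spacing, box_size[2] / 2.0
            ET.SubElement(model, "pose").text = "{} {} {} 0 0 0".format(x, y, z)
            link = ET.SubElement(model, "link", name="link")
            _box_geometry(ET.SubElement(link, "collision", name="collision"), box_size)
            _box_geometry(ET.SubElement(link, "visual", name="visual"), box_size)

    return ET.tostring(sdf, encoding="unicode")
```

Writing `generate_world(10, 10)` to a `.world` file and opening it in Gazebo gives a 100-obstacle test area; replacing the regular grid with randomised poses or imported building models is a natural next step toward realistic indoor and outdoor scenes.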

Follow-the-leader robotic demo

The idea is to create a robotic demonstration in which a mobile robot uses a Kinect or a similar depth camera to identify a person and then follows that person. The project will be implemented using the Robot Operating System (ROS) on either the KUKA youBot or a similar mobile robot platform.
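Once the person has been detected (for example as a tracked skeleton or a blob centroid from the depth camera), following reduces to a small feedback controller on the person's position relative to the robot. The sketch below is a plain proportional controller that holds a target distance and turns to keep the person centred; the gains, limits and the `follow_command` name are assumptions, and the detection step itself is not shown.

```python
import math


def follow_command(person_x, person_y, target_distance=1.0,
                   k_linear=0.5, k_angular=1.5,
                   max_linear=0.4, max_angular=1.0):
    """Proportional follow controller.

    person_x, person_y: person position in the robot frame (metres; x forward, y left,
    as in ROS REP 103). Returns (linear, angular) in m/s and rad/s.
    """
    distance = math.hypot(person_x, person_y)
    bearing = math.atan2(person_y, person_x)  # positive when the person is to the left

    # Only drive forward; stop (rather than back up) when closer than the target distance.
    linear = k_linear * (distance - target_distance)
    linear = max(0.0, min(max_linear, linear))

    # Turn towards the person; positive angular velocity is counter-clockwise (left).
    angular = max(-max_angular, min(max_angular, k_angular * bearing))
    return linear, angular
```

The returned pair maps directly onto the `linear.x` and `angular.z` fields of a `geometry_msgs/Twist` message. A pure proportional law is enough for a demo; smoothing the detected position over a few frames helps when the person detector is noisy.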

Detecting hand signals for intuitive human-robot interface

This project involves creating ROS libraries that use either a Leap Motion Controller or an RGB-D camera to detect the most common human hand signals (e.g., thumbs up, thumbs down, all clear, pointing into the distance, inviting).

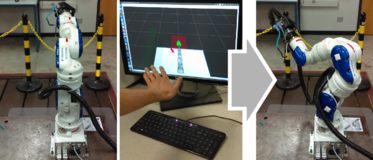
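Whichever sensor is used, hand tracking can be reduced to per-finger features such as "is this finger extended" and "which way does the thumb point". Given such features, a first version of the classifier can be a handful of rules, as in the sketch below. The `HandFeatures` representation and the rule for each signal are hypothetical; only three of the listed signals are covered, and a production library would more likely learn these boundaries from recorded data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HandFeatures:
    # Hypothetical per-frame features produced by an upstream hand tracker.
    thumb: bool
    index: bool
    middle: bool
    ring: bool
    pinky: bool
    thumb_dir_z: float  # vertical component of the unit thumb direction, +1 = straight up


def classify_hand_signal(f, vertical_threshold=0.7):
    """Rule-based classifier for a subset of the listed signals (illustrative only)."""
    others = (f.index, f.middle, f.ring, f.pinky)
    # Only the thumb extended: decide between up and down from its direction.
    if f.thumb and not any(others):
        if f.thumb_dir_z > vertical_threshold:
            return "thumbs_up"
        if f.thumb_dir_z < -vertical_threshold:
            return "thumbs_down"
    # Index extended, remaining fingers curled (thumb may be either).
    if f.index and not any((f.middle, f.ring, f.pinky)):
        return "pointing"
    return "unknown"
```

In a ROS library, a node would compute these features from each tracker frame and publish the label, for example as a `std_msgs/String` message, so that teleoperation or demo nodes can react to it.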

Integrating virtual reality into an intuitive teleoperation system

Adding virtual reality capability to a gesture- and natural-language-based robot teleoperation system.

Kruusamäe et al. (2016). High-precision telerobot with human-centered variable perspective and scalable gestural interface.
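One common way to use a VR headset in teleoperation is to let head orientation steer the operator's viewpoint, for example a pan-tilt camera on the robot or a virtual camera around a 3D view of the scene. The sketch below converts a headset orientation quaternion into clamped pan and tilt angles. It assumes the orientation is already expressed in a z-up, x-forward frame (as in ROS); the joint limits and the idea of driving a pan-tilt unit are illustrative assumptions, not a description of the existing system.

```python
import math


def quaternion_to_pan_tilt(w, x, y, z,
                           pan_limit=math.radians(170), tilt_limit=math.radians(60)):
    """Map a unit orientation quaternion to clamped (pan, tilt) angles in radians.

    Uses the Z-Y-X (yaw-pitch-roll) Euler decomposition; roll is discarded because a
    pan-tilt unit cannot reproduce it. pan_limit and tilt_limit are hypothetical limits.
    """
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    # Clamp the asin argument to guard against rounding slightly outside [-1, 1].
    sin_pitch = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(sin_pitch)  # right-hand rule about y: positive means looking down
    pan = max(-pan_limit, min(pan_limit, yaw))
    tilt = max(-tilt_limit, min(tilt_limit, pitch))
    return pan, tilt
```

Clamping at the joint limits keeps the robot's camera in a valid range even when the operator turns further than the hardware allows; near straight-up or straight-down gaze the yaw angle becomes ill-defined (gimbal lock), which a real system would need to handle explicitly.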