Theses in Robotics

Projects in Advanced Robotics

The main objective of the following projects is to give students experience in working with advanced robotics technology. Our group is active in several R&D projects involving human-robot collaboration, intuitive teleoperation of robots, and autonomous navigation of unmanned mobile platforms. Our main software platforms are Robot Operating System (ROS) for developing software for advanced robot systems and Gazebo for running realistic robotic simulations.

For further information, contact Karl Kruusamäe.

The following is not an exhaustive list of all available thesis/research topics.

Development of demonstrative and promotional applications for KUKA youBot

The goal of this project is to develop promotional use cases for KUKA youBot that demonstrate the capabilities of modern robotics and inspire people to get involved with it. Possible robotic demos include:

- using 3D vision for human and/or environment detection,

- interactive navigation,

- autonomous path planning,

- different pick-and-place applications,

- and human-robot collaboration.

Development of demonstrative and promotional applications for Universal Robots UR5

Sample demonstrations include:

- autonomous pick-and-place,

- load-assistance for human-robot collaboration,

- packaging,

- physical compliance during human-robot interaction,

- tracing an object's surface during scanning,

- robotic kitting,

- grinding of non-flat surfaces.

ROS support and educational materials for open-source mobile robot

The goal of the project is to develop Robot Operating System (ROS) wrapper functions for the drivers of an open-source mobile robot platform that has been successfully used in Robotex competitions. Additionally, educational materials must be developed to integrate the robot platform into the ROS ecosystem. The outcome of this work will open up educational uses and rapid prototyping opportunities for the platform.

Detecting features of urban and off-road surroundings

Accurate navigation of self-driving unmanned robotic platforms requires identification of traversable terrain. A combined analysis of point-cloud data with RGB information of the robot's environment can help autonomous systems make correct decisions. The goal of this work is to develop algorithms for terrain classification.

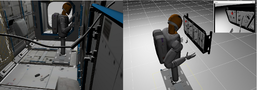
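As a rough illustration of combining point-cloud geometry with RGB information, the sketch below bins colored points into a 2D grid and labels each cell from its height roughness and average color. The function name, the thresholds, and the three labels are assumptions made for this example only; a thesis project would be expected to design and validate its own features and classifier.

```python
from collections import defaultdict
from statistics import pstdev


def classify_terrain(points, cell_size=0.5, max_roughness=0.05):
    """Label grid cells from colored points given as (x, y, z, r, g, b).

    cell_size and max_roughness are illustrative values in metres,
    not tuned parameters.
    """
    cells = defaultdict(list)
    for x, y, z, r, g, b in points:
        # Floor division keeps negative coordinates in the correct cell.
        key = (int(x // cell_size), int(y // cell_size))
        cells[key].append((z, r, g, b))

    labels = {}
    for key, samples in cells.items():
        n = len(samples)
        # Geometric cue: spread of heights inside the cell.
        roughness = pstdev([s[0] for s in samples])
        # Color cue: is green the dominant channel on average?
        mean_r = sum(s[1] for s in samples) / n
        mean_g = sum(s[2] for s in samples) / n
        mean_b = sum(s[3] for s in samples) / n
        greenish = mean_g > mean_r and mean_g > mean_b

        if roughness > max_roughness:
            labels[key] = "obstacle"
        elif greenish:
            labels[key] = "vegetation"
        else:
            labels[key] = "traversable"
    return labels
```

The geometric cue alone cannot tell a flat road from a flat lawn, which is why the color cue is added on top of it. Real data would call for richer features (surface normals, texture) and a learned classifier.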

Robotic simulations (in Gazebo)

1) Developing large-area simulation worlds for mobile robotics. In order to develop robot navigation algorithms, it is more time- and cost-efficient to test robot behavior in a wide range of realistically simulated worlds. These simulated worlds include both indoor and outdoor environments. This work focuses on designing robot simulation environments using the open-source platform Gazebo.

2) Humans in Gazebo. Integrating walking and gesturing humans into Gazebo.

3) Tracked Robots in Gazebo. Creating tracked robots in Gazebo and testing different track configurations.

Follow-the-leader robotic demo

The idea is to create a robotic demonstration in which a mobile robot uses a Kinect or similar depth camera to identify a person and then starts following that person. The project will be implemented using Robot Operating System (ROS) on either KUKA youBot or a similar mobile robot platform.

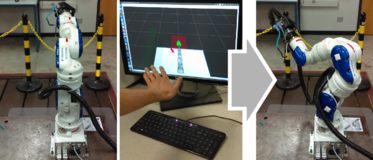
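One possible starting point for the following behavior is a proportional controller that turns the detected person's position into velocity commands. The sketch below is only an assumption about how this could be done: the function name, gains, and limits are hypothetical, and the person's position is assumed to be given in a camera frame with x pointing right and z pointing forward. Person detection and publishing the command to the robot are left out.

```python
import math


def follow_command(person_x, person_z, target_distance=1.2,
                   k_lin=0.8, k_ang=1.5, max_lin=0.5, max_ang=1.0):
    """Return (linear, angular) velocity for following a detected person.

    person_x: lateral offset in metres (positive = right of the camera axis)
    person_z: forward distance in metres
    All gains and limits are illustrative, not tuned for a real robot.
    """
    def clamp(value, low, high):
        return max(low, min(high, value))

    distance = math.hypot(person_x, person_z)
    # Positive bearing means the person is to the left, so turn left.
    bearing = math.atan2(-person_x, person_z)

    # Drive forward when the person is too far, back up when too close.
    linear = clamp(k_lin * (distance - target_distance), -max_lin, max_lin)
    # Rotate to keep the person centred in the camera view.
    angular = clamp(k_ang * bearing, -max_ang, max_ang)
    return linear, angular
```

In a ROS implementation the two returned values would typically fill the linear x and angular z fields of a velocity message. A practical demo would also need to stop the robot when the person is lost from view.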

Detecting hand signals for intuitive human-robot interface

This project involves creating ROS libraries for using either a Leap Motion Controller or an RGB-D camera to detect the most common human hand signals (e.g., thumbs up, thumbs down, all clear, pointing into distance, inviting).

Virtual reality user interface (VRUI) for intuitive teleoperation system

Adding virtual reality capability to a gesture- and natural-language-based robot teleoperation system.

Kruusamäe et al. (2016) High-precision telerobot with human-centered variable perspective and scalable gestural interface

Health monitor for intuitive telerobot

Intelligent status and error handling for an intuitive telerobotic system.

Dynamic stitching for achieving 360° FOV

Automated stitching of images from multiple camera sources for achieving a 360° field-of-view during mobile telerobotic inspection of remote areas.
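Stitching needs neighboring images to overlap, which constrains how many cameras are required. The sketch below works through that arithmetic under a simplifying assumption that is not part of the project description: identical cameras mounted in an evenly spaced horizontal ring. The function names are hypothetical.

```python
import math


def cameras_for_full_circle(horizontal_fov_deg, overlap_deg):
    """Minimum number of identical cameras in a ring covering 360 degrees.

    overlap_deg is the minimum overlap required between neighbouring views.
    """
    usable = horizontal_fov_deg - overlap_deg
    if usable <= 0:
        raise ValueError("overlap must be smaller than the field of view")
    # Each camera contributes (FOV - overlap) degrees of new coverage.
    return math.ceil(360.0 / usable)


def camera_yaw_angles(count):
    """Evenly spaced mounting angles in degrees, starting at 0."""
    return [i * 360.0 / count for i in range(count)]
```

For example, cameras with a 90° horizontal field of view and a required overlap of 15° give `cameras_for_full_circle(90, 15) == 5`, mounted 72° apart.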

3D scanning of industrial objects

Using laser sensors and cameras to create accurate models of industrial products for quality control or further processing.

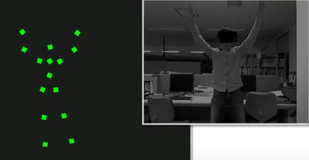

Modeling humans for human-robot interaction

True human-robot collaboration means that the robot must understand the actions, intention, and state of its human partner. This work involves using cameras and other human sensors for digitally representing and modelling humans. There are multiple stages for modeling: a) physical models of human kinematics and dynamics; b) higher-level models for recognizing human intent.

ROS wrapper for Estonian Speech Synthesizer

Creating a ROS package that enables robots to speak in Estonian. The basis of the work is the existing Estonian language speech synthesizer, which needs to be either integrated with the ROS sound_play package or wrapped in a stand-alone ROS package.

Robotic avatar for telepresence

Integrating hand gestures and head movements to control a robot avatar in a virtual reality user interface.

ROS driver for Artificial Muscle actuators

Designing a controller box and writing software for interfacing artificial muscle actuators [1, 2] with ROS.

Upgrading KUKA youBot

KUKA youBot is an omnidirectional mobile manipulator platform. The project involves replacing the current onboard computer with a more capable one, installing a newer version of Ubuntu Linux and ROS, testing core capabilities, and creating documentation for the robot.

Development of strategies for inter-robot knowledge representation

An inseparable part of making robots work together is enabling them to share knowledge about the surrounding environment and each robot's intentions. This project focuses on developing and testing methodologies for inter-robot knowledge representation. This project is developed as a subsystem of TeMoto.

TeMoto Based Smart Home Control

The project involves designing an open-source, scalable smart home controller based on ROS and TeMoto (https://utnuclearroboticspublic.github.io/temoto2/index.html).

Detection of hardware and software resources for smart integration of robots

The vast majority of today’s robotic applications rely on hard-coded device and algorithm usage. This project focuses on developing Resource Snooper software that can detect the addition or removal of resources for the benefit of dynamic reconfiguration of robotic systems. This project is developed as a subsystem of TeMoto.
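The core idea of detecting added and removed resources can be illustrated by comparing two snapshots of the available resources. The sketch below is a minimal example under that assumption; the function name and the use of device paths as resource identifiers are hypothetical and do not reflect any TeMoto interface.

```python
def diff_resources(previous, current):
    """Compare two snapshots of resource identifiers.

    Returns (added, removed) as sorted lists.
    """
    previous, current = set(previous), set(current)
    added = sorted(current - previous)
    removed = sorted(previous - current)
    return added, removed


# Example: a second camera appears and a serial device disappears.
before = ["/dev/video0", "/dev/ttyUSB0"]
after = ["/dev/video0", "/dev/video1"]
added, removed = diff_resources(before, after)
```

A snapshot could come from periodically listing device files or running ROS nodes; an event-based mechanism would avoid polling but depends on the operating system.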

Completed projects

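- Kristo Allaje, Draiveripakett käte jälgimise seadme Leap Motion™ kontroller kasutamiseks robootika arendusplatvormil ROS [Driver package for using the Leap Motion™ controller hand-tracking device on the robotics development platform ROS], BS thesis, 2018

- Martin Maidla, Avatud robotiarendusplatvormi Robotont omniliikumise ja odomeetria arendamine [Development of omnidirectional motion and odometry for the open robot development platform Robotont], BS thesis, 2018

- Raid Vellerind, Avatud robotiarendusplatvormi ROS võimekuse loomine Tartu Ülikooli Robotexi robootikaplatvormile [Creating ROS capability, based on the open robot development platform, for the University of Tartu Robotex robotics platform], BS thesis, 2017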