Theses in Robotics

| Line 25: | Line 25: | ||

[[Image:Ur5 left.png|200px|thumb|left|Universal Robots UR5]] | [[Image:Ur5 left.png|200px|thumb|left|Universal Robots UR5]] | ||

<hr> | |||

=== Development of demonstrative and promotional applications for Universal Robots UR5 === | === Development of demonstrative and promotional applications for Universal Robots UR5 === | ||

Sample demonstrations include: | Sample demonstrations include: | ||

| Line 41: | Line 41: | ||

*multi-robot mapping, | *multi-robot mapping, | ||

*autonomous driving, | *autonomous driving, | ||

<hr> | |||

=== ROS support, demos, and educational materials for open-source mobile robot ROBOTONT === | === ROS support, demos, and educational materials for open-source mobile robot ROBOTONT === | ||

[[Image:RosLarge.png|left|100px|ROS]] | [[Image:RosLarge.png|left|100px|ROS]] | ||

The project involves many potential theses topic on open-source robot platform ROBOTONT. The nature of the thesis can be software development to improve the platform's capabilites, simulation of specific scenarios, and/or demonstration of ROBOTONT in real-life setting. A more detailed thesis topic will be outlined during in-person meeting<br><br> | The project involves many potential theses topic on open-source robot platform ROBOTONT. The nature of the thesis can be software development to improve the platform's capabilites, simulation of specific scenarios, and/or demonstration of ROBOTONT in real-life setting. A more detailed thesis topic will be outlined during in-person meeting<br><br> | ||

[[Image:Ros_equation.png|x100px|What is ROS?]] | [[Image:Ros_equation.png|x100px|What is ROS?]] | ||

<hr> | |||

=== Detecting features of urban and off-road surroundings === | === Detecting features of urban and off-road surroundings === | ||

Accurate navigation of self-driving unmanned robotic platforms requires identification of traversable terrain. A combined analysis of point-cloud data with RGB information of the robot's environment can help autonomous systems make correct decisions. The goal of this work is to develop algorithms for terrain classification. | Accurate navigation of self-driving unmanned robotic platforms requires identification of traversable terrain. A combined analysis of point-cloud data with RGB information of the robot's environment can help autonomous systems make correct decisions. The goal of this work is to develop algorithms for terrain classification. | ||

<br> | <br> | ||

[[Image:Rtab-map.png|x90px|Mapping]] | [[Image:Rtab-map.png|x90px|Mapping]] | ||

<hr> | |||

=== Robotic simulations (in Gazebo) === | === Robotic simulations (in Gazebo) === | ||

1) Developing large area simulation worlds for mobile robotics. In order to develop robot navigation algorithms, it is more time- and cost-efficient to test robot behavior in a wide range of realistically simulated worlds. These simulated worlds include both indoor and outdoor environments. This work focuses on designing robot simulation environments using an open-source platform [http://gazebosim.org Gazebo].<br> | 1) Developing large area simulation worlds for mobile robotics. In order to develop robot navigation algorithms, it is more time- and cost-efficient to test robot behavior in a wide range of realistically simulated worlds. These simulated worlds include both indoor and outdoor environments. This work focuses on designing robot simulation environments using an open-source platform [http://gazebosim.org Gazebo].<br> | ||

| Line 60: | Line 60: | ||

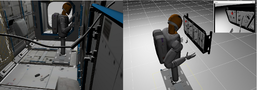

[[Image:Gazebo.png|x90px|Gazebo]] [[Image:Robonaut-2-simulator.png|x90px|NASA Robonaut simulation in Gazebo]] | [[Image:Gazebo.png|x90px|Gazebo]] [[Image:Robonaut-2-simulator.png|x90px|NASA Robonaut simulation in Gazebo]] | ||

<br> | <br> | ||

<hr> | |||

=== Follow-the-leader robotic demo === | === Follow-the-leader robotic demo === | ||

[[Image:LeapMotion.png|200px|thumb|right|Detecting 2 hands with Leap Motion Controller]] | [[Image:LeapMotion.png|200px|thumb|right|Detecting 2 hands with Leap Motion Controller]] | ||

The idea is to create a robotic demonstration where a mobile robot is using Kinect or similar depth-camera for identifying a person and then starts following that person. The project will be implemented using Robot Operating System (ROS) on either KUKA youbot or similar mobile robot platform. | The idea is to create a robotic demonstration where a mobile robot is using Kinect or similar depth-camera for identifying a person and then starts following that person. The project will be implemented using Robot Operating System (ROS) on either KUKA youbot or similar mobile robot platform. | ||

<hr> | |||

=== Detecting hand signals for intuitive human-robot interface === | === Detecting hand signals for intuitive human-robot interface === | ||

This project involves creating ROS libraries for using either a [https://www.leapmotion.com/ Leap Motion Controller] or an [http://www.intel.com/content/www/us/en/architecture-and-technology/realsense-overview.html RGB-D camera] to detect most common human hand signals (e.g., thumbs up, thumbs down, all clear, pointing into distance, inviting). | This project involves creating ROS libraries for using either a [https://www.leapmotion.com/ Leap Motion Controller] or an [http://www.intel.com/content/www/us/en/architecture-and-technology/realsense-overview.html RGB-D camera] to detect most common human hand signals (e.g., thumbs up, thumbs down, all clear, pointing into distance, inviting). | ||

<hr> | |||

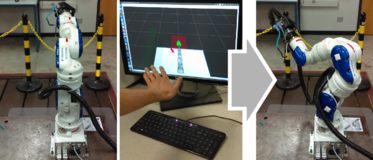

=== Virtual reality user interface (VRUI) for intuitive teleoperation system === | === Virtual reality user interface (VRUI) for intuitive teleoperation system === | ||

Enhancing the user-experience of a virtual reality UI developed by [https://github.com/ut-ims-robotics/vrui_rviz Georg Astok]. Potentially adding [http://www.osvr.org/hardware-devs.html virtual reality capability] to a [https://www.youtube.com/watch?v=L25HHFd00rc gesture- and natural-language-based robot teleoperation system]. | Enhancing the user-experience of a virtual reality UI developed by [https://github.com/ut-ims-robotics/vrui_rviz Georg Astok]. Potentially adding [http://www.osvr.org/hardware-devs.html virtual reality capability] to a [https://www.youtube.com/watch?v=L25HHFd00rc gesture- and natural-language-based robot teleoperation system]. | ||

<br> | <br> | ||

[[Image:Temoto-working-principle-in-pics.png|x160px|Gesture-based teleoperation]] | [[Image:Temoto-working-principle-in-pics.png|x160px|Gesture-based teleoperation]] | ||

<hr> | |||

=== Health monitor for intuitive telerobot === | === Health monitor for intuitive telerobot === | ||

Intelligent status and error handling for an intuitive telerobotic system. | Intelligent status and error handling for an intuitive telerobotic system. | ||

<hr> | |||

=== Dynamic stitching for achieveing 360° FOV === | === Dynamic stitching for achieveing 360° FOV === | ||

Automated image stitching of images from multiple camera sources for achieveing 360° field-of-view during mobile telerobotic inspection of remote areas. | Automated image stitching of images from multiple camera sources for achieveing 360° field-of-view during mobile telerobotic inspection of remote areas. | ||

<hr> | |||

=== 3D scanning of industrial objects === | === 3D scanning of industrial objects === | ||

Using laser sensors and cameras to create accurate models of inustrial producst for quality control or further processing. | Using laser sensors and cameras to create accurate models of inustrial producst for quality control or further processing. | ||

<hr> | |||

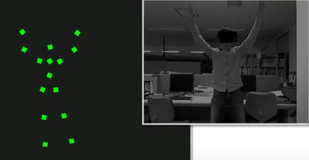

=== Modeling humans for human-robot interaction === | === Modeling humans for human-robot interaction === | ||

True human-robot collaboration means that the robot must understand the actions, intention, and state of its human partner. This work invovlves using cameras and other human sensors for digitally representing and modelling humans. There are multiple stages for modeling: a) physical models of human kinematics and dynamics; b) higher level-models for recognizing human intent.<br> | True human-robot collaboration means that the robot must understand the actions, intention, and state of its human partner. This work invovlves using cameras and other human sensors for digitally representing and modelling humans. There are multiple stages for modeling: a) physical models of human kinematics and dynamics; b) higher level-models for recognizing human intent.<br> | ||

[[Image:Skeletal ROS.PNG|x160px|ROS & Kinect & Skeleton-Markers Package]] | [[Image:Skeletal ROS.PNG|x160px|ROS & Kinect & Skeleton-Markers Package]] | ||

<hr> | |||

=== ROS wrapper for Estonian Speech Synthesizer === | === ROS wrapper for Estonian Speech Synthesizer === | ||

Creating a ROS package that enables robots to speak in Estonian. The basis of the work is the existing [https://www.eki.ee/heli/index.php?option=com_content&view=article&id=6&Itemid=465 Estonian language speech synthesizer] that needs to be integrated with ROS [http://wiki.ros.org/sound_play sound_play] package or a stand-alone ROS wrapper package. | Creating a ROS package that enables robots to speak in Estonian. The basis of the work is the existing [https://www.eki.ee/heli/index.php?option=com_content&view=article&id=6&Itemid=465 Estonian language speech synthesizer] that needs to be integrated with ROS [http://wiki.ros.org/sound_play sound_play] package or a stand-alone ROS wrapper package. | ||

<hr> | |||

=== Robotic avatar for telepresence === | === Robotic avatar for telepresence === | ||

Integrating hand gestures and head movements to control a robot avatar in virtual reality user interface. | Integrating hand gestures and head movements to control a robot avatar in virtual reality user interface. | ||

<hr> | |||

=== ROS driver for Artificial Muscle actuators === | === ROS driver for Artificial Muscle actuators === | ||

Desigining a controller box and writing software for interfacing artificial muscle actuators [{{doi-inline|10.3390/act4010017|1}}, [https://www.youtube.com/watch?v=tspg_l49hSA&index=10&list=UU186z2gc0XiLh12hNvPZdUQ 2]] ROS. | Desigining a controller box and writing software for interfacing artificial muscle actuators [{{doi-inline|10.3390/act4010017|1}}, [https://www.youtube.com/watch?v=tspg_l49hSA&index=10&list=UU186z2gc0XiLh12hNvPZdUQ 2]] ROS. | ||

<hr> | |||

=== TeMoto based smart home control === | === TeMoto based smart home control === | ||

The project involves designing a open-source ROS+[https://utnuclearroboticspublic.github.io/temoto2/index.html TeMoto] based scalable smart home controller. | The project involves designing a open-source ROS+[https://utnuclearroboticspublic.github.io/temoto2/index.html TeMoto] based scalable smart home controller. | ||

<hr> | |||

=== Detection of hardware and software resources for smart integration of robots === | === Detection of hardware and software resources for smart integration of robots === | ||

Vast majority of today’s robotic applications rely on hard-coded device and algorithm usage. This project focuses on developing a Resource Snooper software, that can detect addition or removal of resources for the benefit of dynamic reconfiguration of robotic systems. This project is developed as a subsystem of [https://utnuclearroboticspublic.github.io/temoto2/index.html TeMoto]. | Vast majority of today’s robotic applications rely on hard-coded device and algorithm usage. This project focuses on developing a Resource Snooper software, that can detect addition or removal of resources for the benefit of dynamic reconfiguration of robotic systems. This project is developed as a subsystem of [https://utnuclearroboticspublic.github.io/temoto2/index.html TeMoto]. | ||

<hr> | |||

=== Sonification of feedback during teleoperation of robots === | === Sonification of feedback during teleoperation of robots === | ||

Humans are used to receiving auditory feedback in their everyday lives. It helps us make decision and be aware of potential dangers. Telerobotic interfaces can deploy the same idea to improve the Situational Awareness and robotic task efficiency. The thesis project involves a study about different sonification solutions and implementation of it in a telerobotic application using ROS. | Humans are used to receiving auditory feedback in their everyday lives. It helps us make decision and be aware of potential dangers. Telerobotic interfaces can deploy the same idea to improve the Situational Awareness and robotic task efficiency. The thesis project involves a study about different sonification solutions and implementation of it in a telerobotic application using ROS. | ||

<hr> | |||

=== Human-Robot and Robot-Robot collaboration applications === | === Human-Robot and Robot-Robot collaboration applications === | ||

Creating a demo or analysis of software capabilities related to human-robot or tobot-robot teams | Creating a demo or analysis of software capabilities related to human-robot or tobot-robot teams | ||

| Line 111: | Line 111: | ||

**youbot+drone - a drone maps the environment (for example a maze) and ground vehicle uses this information to traverse the maze | **youbot+drone - a drone maps the environment (for example a maze) and ground vehicle uses this information to traverse the maze | ||

**youbot+clearbot - youbot cannot go up ledges but it can lift smaller robot, such as clearbot, up a ledge. | **youbot+clearbot - youbot cannot go up ledges but it can lift smaller robot, such as clearbot, up a ledge. | ||

<hr> | |||

=== Developing ROS driver for a robotic gripper === | === Developing ROS driver for a robotic gripper === | ||

The goal for this project is to develop ROS drivers for LEHF32K2-64 gripper. The work is concluded by demonstrating the functionalities of the gripper via pick-and-place task. <br> | The goal for this project is to develop ROS drivers for LEHF32K2-64 gripper. The work is concluded by demonstrating the functionalities of the gripper via pick-and-place task. <br> | ||

[[File:Smc gripper.jpg|120px|SMC LEHF32K2-64 gripper.]] | [[File:Smc gripper.jpg|120px|SMC LEHF32K2-64 gripper.]] | ||

<hr> | |||

=== Mirroring human hand movements on industrial robots === | === Mirroring human hand movements on industrial robots === | ||

The goal of this project is to integrate continuous control of industrial robot manipulator with a gestural telerobotics interface. The recommended tools for this thesis project are Leap Motion Controller or a standard web camera, Universal Robot UR5 manipulator, and ROS. | The goal of this project is to integrate continuous control of industrial robot manipulator with a gestural telerobotics interface. The recommended tools for this thesis project are Leap Motion Controller or a standard web camera, Universal Robot UR5 manipulator, and ROS. | ||

<hr> | |||

=== ROS2-based robotics demo === | === ROS2-based robotics demo === | ||

Converting ROS demos and tutorials to ROS2. | Converting ROS demos and tutorials to ROS2. | ||

<hr> | |||

=== ROS2 for robotont === | === ROS2 for robotont === | ||

Creating ROS2 support for robotont mobile platform | Creating ROS2 support for robotont mobile platform | ||

<hr> | |||

=== TeMoto for robotont === | === TeMoto for robotont === | ||

Swarm-management for robotont using [https://temoto-telerobotics.github.io TeMoto] framework. | Swarm-management for robotont using [https://temoto-telerobotics.github.io TeMoto] framework. | ||

<hr> | |||

=== 3D lidar for mobile robotics === | === 3D lidar for mobile robotics === | ||

Analysing the technical characteristics of 3D lidar.. Desinging and constructing the mount for Ouster OS-1 lidar and validating its applicability for indoor and outdoor scenarios. | Analysing the technical characteristics of 3D lidar.. Desinging and constructing the mount for Ouster OS-1 lidar and validating its applicability for indoor and outdoor scenarios. | ||

<hr> | |||

=== Making KUKA youBot user friendly again === | === Making KUKA youBot user friendly again === | ||

This thesis focuses on integrating the low-level software capabilities of KUKA youBot in order to achieve high-level commonly used functionalities such as | This thesis focuses on integrating the low-level software capabilities of KUKA youBot in order to achieve high-level commonly used functionalities such as | ||

| Line 140: | Line 140: | ||

The thesis is suitable for both, master and bachelor levels, as the associated code can be scaled up to generic "user-friendly control" package. | The thesis is suitable for both, master and bachelor levels, as the associated code can be scaled up to generic "user-friendly control" package. | ||

<hr> | |||

=== Flexible peer-to-peer network infrastructure for environments with restricted signal coverage=== | === Flexible peer-to-peer network infrastructure for environments with restricted signal coverage=== | ||

A very common issue with robotics in real world environments is that the network coverage is highly dependent on the environment. This makes the communication between the robot-to-base-station or robot-to-robot unreliable, potentially compromising the whole mission. This thesis focuses on implementing a peer-to-peer based network system on mobile robot platforms, where the platforms extend the network coverage between, e.g., an operator and a Worker robot. The work will be demonstrated in a real world setting, where common networking strategies for teleoperation (tethered or single router based) do not work. | A very common issue with robotics in real world environments is that the network coverage is highly dependent on the environment. This makes the communication between the robot-to-base-station or robot-to-robot unreliable, potentially compromising the whole mission. This thesis focuses on implementing a peer-to-peer based network system on mobile robot platforms, where the platforms extend the network coverage between, e.g., an operator and a Worker robot. The work will be demonstrated in a real world setting, where common networking strategies for teleoperation (tethered or single router based) do not work. | ||

<hr> | |||

=== Enhancing teleoperation control interface with augmented cues to provoke caution=== | === Enhancing teleoperation control interface with augmented cues to provoke caution=== | ||

The task is to create a telerobot control interface where video feed from the remote site and/or a mixed-reality scene is augmented with visual cues to provoke caution in human operator. | The task is to create a telerobot control interface where video feed from the remote site and/or a mixed-reality scene is augmented with visual cues to provoke caution in human operator. | ||

<hr> | |||

= Completed projects = | = Completed projects = | ||

Revision as of 13:49, 10 July 2019

Projects in Advanced Robotics

The main objective of the following projects is to give students experience in working with advanced robotics technology. Our group is active in several R&D projects involving human-robot collaboration, intuitive teleoperation of robots, and autonomous navigation of unmanned mobile platforms. Our main software platforms are Robot Operating System (ROS) for developing software for advanced robot systems and Gazebo for running realistic robotic simulations.

For further information, contact Karl Kruusamäe and Arun Kumar Singh

The following is not an exhaustive list of all available thesis/research topics.

Highlighted thesis topics for the 2019/2020 study year

- 3D lidar for mobile robotics

- ROS wrapper for Estonian Speech Synthesizer

- Making KUKA youBot user friendly again

- Flexible peer-to-peer network infrastructure for environments with restricted signal coverage

List of potential thesis topics

Development of demonstrative and promotional applications for KUKA youBot

The goal of this project is to develop promotional use cases for KUKA youBot that demonstrate the capabilities of modern robotics and inspire people to get involved with it. The list of possible robotic demos includes:

- demonstration of motion planning algorithms for mobile manipulation,

- using 3D vision for human and/or environment detection,

- interactive navigation,

- autonomous path planning,

- different pick-and-place applications,

- and human-robot collaboration.

Development of demonstrative and promotional applications for Universal Robots UR5

Sample demonstrations include:

- autonomous pick-and-place,

- load-assistance for human-robot collaboration,

- packaging,

- physical compliance during human-robot interaction,

- tracing an object's surface during scanning,

- robotic kitting,

- grinding of non-flat surfaces.

Development of demonstrative and promotional applications for Clearpath Jackal

Sample demonstrations include:

- human-robot interaction,

- multi-robot mapping,

- autonomous driving.

ROS support, demos, and educational materials for open-source mobile robot ROBOTONT

The project involves many potential thesis topics on the open-source robot platform ROBOTONT. The nature of the thesis can be software development to improve the platform's capabilities, simulation of specific scenarios, and/or demonstration of ROBOTONT in a real-life setting. A more detailed thesis topic will be outlined during an in-person meeting.

Detecting features of urban and off-road surroundings

Accurate navigation of self-driving unmanned robotic platforms requires identification of traversable terrain. A combined analysis of point-cloud data with RGB information of the robot's environment can help autonomous systems make correct decisions. The goal of this work is to develop algorithms for terrain classification.

Robotic simulations (in Gazebo)

1) Developing large-area simulation worlds for mobile robotics. When developing robot navigation algorithms, it is more time- and cost-efficient to test robot behavior in a wide range of realistically simulated worlds than on physical hardware alone. These simulated worlds include both indoor and outdoor environments. This work focuses on designing robot simulation environments using the open-source platform Gazebo.

2) Humans in Gazebo. Integrating walking and gesturing humans into Gazebo.

3) Tracked robots in Gazebo. Creating tracked robots in Gazebo and testing different track configurations.

4) Robot basketball simulation for game strategy and shot accuracy

5) Robotont at the Institute of Technology

Follow-the-leader robotic demo

The idea is to create a robotic demonstration in which a mobile robot uses a Kinect or similar depth camera to identify a person and then starts following that person. The project will be implemented using Robot Operating System (ROS) on either a KUKA youBot or a similar mobile robot platform.
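The following behaviour can be sketched as a simple proportional controller. This is a minimal illustration, assuming the depth camera pipeline already yields the person's position in the robot frame (x forward, y left, in metres). The function name, gains, target distance, and velocity limits are hypothetical; on a real robot the returned values would be sent as a ROS velocity command.

```python
import math


def follow_command(person_x, person_y, target_dist=1.0,
                   k_lin=0.5, k_ang=1.5, max_lin=0.4, max_ang=1.0):
    """Return (linear, angular) velocity that steers the robot toward a person.

    person_x, person_y: person position in the robot frame, in metres
    (x forward, y left). Gains and limits are illustrative assumptions.
    """
    distance = math.hypot(person_x, person_y)
    bearing = math.atan2(person_y, person_x)  # angle to the person, radians

    # Proportional control: close the distance error and turn to face the person.
    linear = k_lin * (distance - target_dist)
    angular = k_ang * bearing

    # Clamp both commands to the assumed velocity limits.
    linear = max(-max_lin, min(max_lin, linear))
    angular = max(-max_ang, min(max_ang, angular))
    return linear, angular
```

With these assumed defaults the robot stands still when the person is exactly one metre straight ahead, and drives at the clamped maximum speed when the person is far away.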

Detecting hand signals for intuitive human-robot interface

This project involves creating ROS libraries for using either a Leap Motion Controller or an RGB-D camera to detect the most common human hand signals (e.g., thumbs up, thumbs down, all clear, pointing into the distance, inviting).

Virtual reality user interface (VRUI) for intuitive teleoperation system

Enhancing the user experience of a virtual reality UI developed by Georg Astok. Potentially adding virtual reality capability to a gesture- and natural-language-based robot teleoperation system.

Health monitor for intuitive telerobot

Intelligent status and error handling for an intuitive telerobotic system.

Dynamic stitching for achieving 360° FOV

Automated stitching of images from multiple camera sources to achieve a 360° field of view during mobile telerobotic inspection of remote areas.
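As a rough planning aid, the number of cameras needed to close a full circle can be estimated from each camera's horizontal field of view and the overlap that the stitching step needs between neighbouring images. The sketch below is only a back-of-the-envelope calculation under the assumption of identical, evenly spaced cameras; the function name and the example values are hypothetical.

```python
import math


def cameras_for_full_circle(fov_deg, overlap_deg):
    """Estimate how many identical cameras cover 360 degrees.

    fov_deg: horizontal field of view of one camera, in degrees.
    overlap_deg: overlap required with the neighbouring camera, in degrees.
    Each camera contributes (fov_deg - overlap_deg) of new coverage.
    """
    effective = fov_deg - overlap_deg
    if effective <= 0:
        raise ValueError("overlap must be smaller than the field of view")
    return math.ceil(360 / effective)


print(cameras_for_full_circle(90, 10))   # 5
print(cameras_for_full_circle(120, 0))   # 3
```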

3D scanning of industrial objects

Using laser sensors and cameras to create accurate models of industrial products for quality control or further processing.

Modeling humans for human-robot interaction

True human-robot collaboration means that the robot must understand the actions, intention, and state of its human partner. This work involves using cameras and other human sensors for digitally representing and modeling humans. There are multiple stages of modeling: a) physical models of human kinematics and dynamics; b) higher-level models for recognizing human intent.

ROS wrapper for Estonian Speech Synthesizer

Creating a ROS package that enables robots to speak in Estonian. The basis of the work is the existing Estonian language speech synthesizer, which needs to be integrated either with the ROS sound_play package or as a stand-alone ROS wrapper package.

Robotic avatar for telepresence

Integrating hand gestures and head movements to control a robot avatar in a virtual reality user interface.

ROS driver for Artificial Muscle actuators

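Designing a controller box and writing software for interfacing artificial muscle actuators [1, 2] with ROS. ([1] doi:10.3390/act4010017; [2] https://www.youtube.com/watch?v=tspg_l49hSA&index=10&list=UU186z2gc0XiLh12hNvPZdUQ)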

TeMoto-based smart home control

The project involves designing an open-source, scalable smart home controller based on ROS and TeMoto.

Detection of hardware and software resources for smart integration of robots

The vast majority of today's robotic applications rely on hard-coded device and algorithm usage. This project focuses on developing Resource Snooper software that can detect the addition or removal of resources for the benefit of dynamic reconfiguration of robotic systems. This project is developed as a subsystem of TeMoto.
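The core idea of detecting added or removed resources can be illustrated by comparing two snapshots of resource identifiers. This is a minimal sketch, not TeMoto's actual interface: the function name is hypothetical, and the device paths in the example are placeholder values standing in for whatever a real snooper would enumerate.

```python
def diff_resources(previous, current):
    """Compare two snapshots of resource identifiers.

    Returns a tuple (added, removed) of sorted lists: resources that
    appeared since the previous snapshot and resources that disappeared.
    """
    previous, current = set(previous), set(current)
    return sorted(current - previous), sorted(previous - current)


# Placeholder snapshots taken at two points in time.
before = ["/dev/video0", "/dev/ttyUSB0"]
after = ["/dev/video0", "/dev/video1"]
added, removed = diff_resources(before, after)
print(added)    # ['/dev/video1']
print(removed)  # ['/dev/ttyUSB0']
```

A polling loop that calls such a function periodically is the simplest design; an event-driven approach would avoid polling but depends on what notifications the operating system or middleware provides.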

Sonification of feedback during teleoperation of robots

Humans are used to receiving auditory feedback in their everyday lives. It helps us make decisions and stay aware of potential dangers. Telerobotic interfaces can use the same idea to improve situational awareness and robotic task efficiency. The thesis project involves a study of different sonification solutions and their implementation in a telerobotic application using ROS.
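One possible sonification mapping, given here only as an illustration, converts the distance to the nearest obstacle into a tone frequency so that the pitch rises as the robot gets closer. The function name, the distance range, and the frequency range are assumptions chosen for the example, not results from a study.

```python
def distance_to_pitch(distance_m, d_min=0.2, d_max=3.0,
                      f_low=220.0, f_high=880.0):
    """Map an obstacle distance in metres to a tone frequency in Hz.

    Distances are clamped to [d_min, d_max]. The mapping is exponential in
    frequency, so equal changes in distance give equal musical intervals.
    All default values are illustrative assumptions.
    """
    clamped = max(d_min, min(d_max, distance_m))
    closeness = (d_max - clamped) / (d_max - d_min)  # 0.0 when far, 1.0 when near
    return f_low * (f_high / f_low) ** closeness
```

With the assumed defaults, an obstacle at 3 m or farther yields the lowest tone (220 Hz) and an obstacle at 0.2 m or closer yields the highest tone (880 Hz).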

Human-robot and robot-robot collaboration applications

Creating a demo or analysis of software capabilities related to human-robot or robot-robot teams:

- human-robot collaborative assembly

- distributed mapping; analysis and demo of existing ROS packages for multi-robot mapping (e.g., segmap https://youtu.be/JJhEkIA1xSE)

- inaccessible region teamwork:

  - youBot+drone - a drone maps the environment (for example, a maze) and a ground vehicle uses this information to traverse the maze

  - youBot+clearbot - youBot cannot go up ledges, but it can lift a smaller robot, such as clearbot, up a ledge.

Developing ROS driver for a robotic gripper

The goal of this project is to develop ROS drivers for the LEHF32K2-64 gripper. The work is concluded by demonstrating the functionalities of the gripper via a pick-and-place task.

Mirroring human hand movements on industrial robots

The goal of this project is to integrate continuous control of an industrial robot manipulator with a gestural telerobotics interface. The recommended tools for this thesis project are a Leap Motion Controller or a standard web camera, a Universal Robots UR5 manipulator, and ROS.

ROS2-based robotics demo

Converting ROS demos and tutorials to ROS2.

ROS2 for robotont

Creating ROS2 support for the robotont mobile platform.

TeMoto for robotont

Swarm management for robotont using the TeMoto framework.

3D lidar for mobile robotics

Analysing the technical characteristics of 3D lidar. Designing and constructing a mount for the Ouster OS-1 lidar and validating its applicability for indoor and outdoor scenarios.

Making KUKA youBot user friendly again

This thesis focuses on integrating the low-level software capabilities of KUKA youBot in order to achieve commonly used high-level functionalities such as:

- teach mode - robot can replicate user demonstrated trajectories

- end-effector jogging

- gripper control

- gamepad integration - user can control the robot via gamepad

- web integration - user can control the robot via internet browser

The thesis is suitable for both master's and bachelor's levels, as the associated code can be scaled up to a generic "user-friendly control" package.

Flexible peer-to-peer network infrastructure for environments with restricted signal coverage

A very common issue with robotics in real-world environments is that network coverage is highly dependent on the environment. This makes robot-to-base-station or robot-to-robot communication unreliable, potentially compromising the whole mission. This thesis focuses on implementing a peer-to-peer network system on mobile robot platforms, where the platforms extend the network coverage between, e.g., an operator and a worker robot. The work will be demonstrated in a real-world setting where common networking strategies for teleoperation (tethered or based on a single router) do not work.
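The idea of robots acting as relays can be sketched as a graph search: two nodes are linked when they are within radio range of each other, and a breadth-first search finds the chain with the fewest hops between the operator and the worker. This is a simplified illustration that assumes a fixed circular radio range and known 2D positions; the function name, node names, and distances are hypothetical.

```python
import math
from collections import deque


def relay_path(positions, source, target, radio_range):
    """Find the fewest-hop relay chain from source to target.

    positions: dict mapping node name to an (x, y) position in metres.
    Two nodes are assumed to be linked when their distance is at most
    radio_range. Returns the list of node names, or None if unreachable.
    """
    def linked(a, b):
        return math.dist(positions[a], positions[b]) <= radio_range

    parent = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            # Walk back through the parents to reconstruct the chain.
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for other in positions:
            if other not in parent and linked(node, other):
                parent[other] = node
                queue.append(other)
    return None


nodes = {"operator": (0, 0), "relay1": (8, 0), "relay2": (16, 0), "worker": (24, 0)}
print(relay_path(nodes, "operator", "worker", radio_range=10))
# ['operator', 'relay1', 'relay2', 'worker']
```

Real radio links depend on obstacles and interference rather than distance alone, so a practical system would replace the distance check with measured link quality.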

Enhancing teleoperation control interface with augmented cues to provoke caution

The task is to create a telerobot control interface where the video feed from the remote site and/or a mixed-reality scene is augmented with visual cues to provoke caution in the human operator.

Completed projects

Master's theses

- Madis K Nigol, Õppematerjalid robotplatvormile Robotont [Study materials for robot platform Robotont], MS thesis, 2019

- Renno Raudmäe, Avatud robotplatvorm Robotont [Open source robotics platform Robotont], MS thesis, 2019

- Asif Sattar, Human detection and distance estimation with monocular camera using YOLOv3 neural network [Inimeste tuvastamine ning kauguse hindamine kasutades kaamerat ning YOLOv3 tehisnärvivõrku], MS thesis, 2019

- Ragnar Margus, Kergliiklusvahendite jagamisteenuseks vajaliku positsioneerimismooduli loomine ja uurimine [Development and testing of an IoT module for electric vehicle sharing service], MS thesis, 2019

- Pavel Šumejko, Robust Solution for Extrinsic Calibration of a 2D Laser-Rangefinder and a Monocular USB Camera [Meetod 2D laserkaugusmõõdiku ja USB-kaamera väliseks kalibreerimiseks], MS thesis, 2019

- Dzvezdana Arsovska, Building an Efficient and Secure Software Supply Pipeline for Aerial Robotics Application [Efektiivne ja turvaline tarkvaraarendusahel lennurobootika rakenduses], MS thesis, 2019

- Tõnis Tiimus, Visuaalselt esilekutsutud potentsiaalidel põhinev aju-arvuti liides robootika rakendustes [A VEP-based BCI for robotics applications], MS thesis, 2018

- Martin Appo, Hardware-agnostic compliant control ROS package for collaborative industrial manipulators [Riistvarapaindlik ROSi tarkvarapakett tööstuslike robotite mööndlikuks juhtimiseks], MS thesis, 2018

- Hassan Mahmoud Shehawy Elhanash, Optical Tracking of Forearm for Classifying Fingers Poses [Küünarvarre visuaalne jälgimine ennustamaks sõrmede asendeid], MS thesis, 2018

Bachelor's theses

- Meelis Pihlap, Mitme roboti koostöö funktsionaalsuste väljatöötamine tarkvararaamistikule TeMoto [Multi-robot collaboration functionalities for robot software development framework TeMoto], BS thesis, 2019

- Kaarel Mark, Liitreaalsuse kasutamine tootmisprotsessis asukohtade määramisel [Augmented reality for location determination in manufacturing], BS thesis, 2019

- Kätriin Julle, Roboti KUKA youBot riistvara ja ROS-tarkvara uuendamine [Upgrading robot KUKA youBot’s hardware and ROS-software], BS thesis, 2019

- Georg Astok, Roboti juhtimine virtuaalreaalsuses kasutades ROS-raamistikku [Creating virtual reality user interface using only ROS framework], BS thesis, 2019

- Martin Hallist, Robotipõhine kaugkohalolu käte liigutuste ülekandmiseks [Teleoperation robot for arms motions], BS thesis, 2019

- Ahmed Hassan Helmy Mohamed, Software integration of autonomous robot system for mixing and serving drinks [Jooke valmistava ja serveeriva robotsüsteemi tarkvaralahendus], BS thesis, 2019

- Kristo Allaje, Draiveripakett käte jälgimise seadme Leap Motion™ kontroller kasutamiseks robootika arendusplatvormil ROS [Driver package for using Leap Motion™ controller on the robotics development platform ROS], BS thesis, 2018

- Martin Maidla, Avatud robotiarendusplatvormi Robotont omniliikumise ja odomeetria arendamine [Omnimotion and odometry development for open robot development platform Robotont], BS thesis, 2018

- Raid Vellerind, Avatud robotiarendusplatvormi ROS võimekuse loomine Tartu Ülikooli Robotexi robootikaplatvormile [ROS driver development for the University of Tartu’s Robotex robotics platform], BS thesis, 2017