Theses in Robotics: Difference between revisions



[[Category:IMS-robotics]] | [[Category:IMS-robotics]] | ||

<div class="toclimit-3">__TOC__</div> | |||

= Projects in Advanced Robotics = | = Projects in Advanced Robotics = | ||

''The main objective of the following projects is to give students experience in working with advanced robotics technology. Our group is active in several R&D projects involving human-robot collaboration, intuitive teleoperation of robots, and autonomous navigation of unmanned mobile platforms. Our main software platforms are [http://www.ros.org/ Robot Operating System (ROS)] for developing software for advanced robot systems and [http://gazebosim.org/ Gazebo] for running realistic robotic simulations.''<br><br> | ''The main objective of the following projects is to give students experience in working with advanced robotics technology. Our group is active in several R&D projects involving human-robot collaboration, intuitive teleoperation of robots, and autonomous navigation of unmanned mobile platforms. Our main software platforms are [http://www.ros.org/ Robot Operating System (ROS)] for developing software for advanced robot systems and [http://gazebosim.org/ Gazebo] for running realistic robotic simulations.''<br><br> | ||

For further information, contact [[User:Karl|Karl Kruusamäe]] | For further information, contact [[User:Karl|Karl Kruusamäe]]. | ||

The following is not an exhaustive list of all available thesis/research topics. | The following is not an exhaustive list of all available thesis/research topics. | ||

== Highlighted thesis topics for the 2025/2026 study year == | |||

# [[#Application of VLA (Vision-Language-Action) models in robotics|Application of VLA (Vision-Language-Action) models in robotics]] | |||

# [[#LLM-based task planning for robots|LLM-based task planning for robots]] | |||

# [[#ROBOTONT: analysis of different options as on-board computers|ROBOTONT: analysis of different options as on-board computers]] | |||

# [[#SemuBOT: multiple topics|SemuBOT: multiple topics]] | |||

# [[#ROBOTONT: integrating a graphical programming interface|ROBOTONT: integrating a graphical programming interface]] | |||

# [[#Sign-language-based control for robots|Sign-language-based control for robots]] | |||

# [[#Robotic Study Companion: a social robot for students in higher education|Robotic Study Companion: a social robot for students in higher education]] | |||

# [[#Quantitative Evaluation of the Situation Awareness during the Teleoperation of an Urban Vehicle|Quantitative Evaluation of the Situation Awareness during the Teleoperation of an Urban Vehicle]] | |||

== List of potential thesis topics == | |||

Our inventory includes but is not limited to: | |||

<gallery mode="packed"> | |||

File:Youbot.png|120px|thumb|KUKA youBot | |||

File:Ur5_left.png|120px|thumb|Universal Robot UR5 | |||

File:Franka_Emika_Panda.jpg|120px|thumb|Franka Emika Panda | |||

File:Clearpath_Jackal.jpg|120px|thumb|Clearpath Jackal | |||

File:Kinova_KG-3_Gripper.jpg|120px|thumb|Kinova 3-finger gripper | |||

File:Xarm7.jpg|120px|thumb|UFACTORY xArm7 | |||

File:Robotont_banner.png|120px|thumb|robotont | |||

File:Turtlebot3-waffle-pi.jpg|thumb|TurtleBot3 | |||

File:Parrot-bebop-2.jpg|thumb|Parrot Bebop 2 | |||

</gallery> | |||

<hr> | |||

=== ROBOTONT: Docker-Driven ROS Environment Switching === | |||

This thesis focuses on integrating Docker containers to manage and switch between different ROS (Robot Operating System) environments on Robotont. Currently, ROS is installed natively on Robotont's Ubuntu-based onboard computer, limiting flexibility in system recovery and configuration switching. The goal is to develop a Docker-based solution that allows users to switch between ROS environments directly from Robotont’s existing low-level menu interface. | |||

<hr> | |||

== | === ROBOTONT: designing and implementing a communication protocol for additional devices === | ||

Robotont currently includes a designated area on its front for attaching additional devices, such as an ultrasonic range finder, a servo motor connected to an Arduino, or a MikroBUS device. This thesis aims to design and implement a standard communication protocol that will enable seamless integration and control of these devices through the high-level ROS framework running on Robotont’s onboard computer. | |||

<hr> | |||

== | === ROBOTONT: analysis of different options as on-board computers === | ||

Currently, ROBOTONT uses an Intel NUC as its onboard computer. The goal of this thesis is to validate Robotont's software stack on alternative compute devices (e.g. Raspberry Pi 4, Intel Compute Stick, and NVIDIA Jetson Nano) and benchmark their performance for the most popular Robotont use-case demos (e.g. webapp teleop, AR-tag steering, 2D mapping, and 3D mapping). The objective is to propose at least two different compute solutions: one that optimizes for cost and another that optimizes for performance. | |||

<hr> | |||

== | === ROBOTONT Lite === | ||

The goal of this thesis is to optimize the ROBOTONT platform for cost by replacing the onboard compute and sensor with low-cost alternatives while ensuring ROS/ROS2 software compatibility. | |||

<hr> | |||

== | === ROBOTONT: integrating a graphical programming interface === | ||

The goal of this thesis is to integrate a graphical programming solution (e.g. Scratch or Blockly) to enable programming of Robotont by non-experts. See, e.g., https://doi.org/10.48550/arXiv.2011.13706 | |||

<hr> | |||

== | === SemuBOT: multiple topics === | ||

In 2023/2024, many topics are developed to support the open-source humanoid robot project SemuBOT (https://www.facebook.com/semubotmtu/). | |||

<hr> | |||


== | === Robotic Study Companion: a social robot for students in higher education === | ||

Potential Topics: | |||

* Enhance the Robot's Speech/Natural Language Capabilities | |||

* Build a Local Language Model for the RSC | |||

* Develop and Program the Robot’s Behavior and Personality | |||

* Build a Digital Twin Simulation for Multimodal Interaction | |||

* Explore the Use of the RSC as an Affective Robot to Address Students’ Academic Emotions | |||

* Explore and Implement Cybersecurity Measures for Social Robots | |||

[https://github.com/orgs/RobotStudyCompanion/discussions/3 More info on GitHub] | reach out to farnaz.baksh@ut.ee for more info | |||

<hr> | |||

== Virtual reality user interface (VRUI) for intuitive teleoperation system == | === Virtual reality user interface (VRUI) for intuitive teleoperation system === | ||

[[Image:LeapMotion.png|200px|thumb|right|Detecting 2 hands with Leap Motion Controller]] | |||

Enhancing the user experience of a virtual reality UI developed by [https://github.com/ut-ims-robotics/vrui_rviz Georg Astok]. Potentially adding [http://www.osvr.org/hardware-devs.html virtual reality capability] to a [https://www.youtube.com/watch?v=L25HHFd00rc gesture- and natural-language-based robot teleoperation system]. | |||

<br> | <br> | ||

[[Image:Temoto-working-principle-in-pics.png|x160px|Gesture-based teleoperation]] | [[Image:Temoto-working-principle-in-pics.png|x160px|Gesture-based teleoperation]] | ||

<hr> | |||

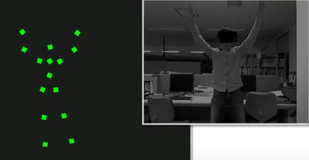

= | === Modeling humans for human-robot interaction === | ||

== Modeling humans for human-robot interaction == | |||

True human-robot collaboration means that the robot must understand the actions, intention, and state of its human partner. This work involves using cameras and other human sensors for digitally representing and modeling humans. There are multiple stages for modeling: a) physical models of human kinematics and dynamics; b) higher-level models for recognizing human intent.<br> | True human-robot collaboration means that the robot must understand the actions, intention, and state of its human partner. This work involves using cameras and other human sensors for digitally representing and modeling humans. There are multiple stages for modeling: a) physical models of human kinematics and dynamics; b) higher-level models for recognizing human intent.<br> | ||

[[Image:Skeletal ROS.PNG|x160px|ROS & Kinect & Skeleton-Markers Package]] | [[Image:Skeletal ROS.PNG|x160px|ROS & Kinect & Skeleton-Markers Package]] | ||

<hr> | |||

= | === Robotic avatar for telepresence === | ||

== Robotic avatar for telepresence == | |||

Integrating hand gestures and head movements to control a robot avatar in a virtual reality user interface. | Integrating hand gestures and head movements to control a robot avatar in a virtual reality user interface. | ||

<hr> | |||

= | === Detection of hardware and software resources for smart integration of robots === | ||

== Detection of hardware and software resources for smart integration of robots == | |||

The vast majority of today’s robotic applications rely on hard-coded device and algorithm usage. This project focuses on developing Resource Snooper software that can detect the addition or removal of resources for the benefit of dynamic reconfiguration of robotic systems. This project is developed as a subsystem of [https://utnuclearroboticspublic.github.io/temoto2/index.html TeMoto]. | The vast majority of today’s robotic applications rely on hard-coded device and algorithm usage. This project focuses on developing Resource Snooper software that can detect the addition or removal of resources for the benefit of dynamic reconfiguration of robotic systems. This project is developed as a subsystem of [https://utnuclearroboticspublic.github.io/temoto2/index.html TeMoto]. | ||

<hr> | |||

= | === Human-Robot and Robot-Robot collaboration applications === | ||

== Human-Robot and Robot-Robot collaboration applications == | |||

Creating a demo or analysis of software capabilities related to human-robot or robot-robot teams | Creating a demo or analysis of software capabilities related to human-robot or robot-robot teams | ||

* human-robot collaborative assembly | * human-robot collaborative assembly | ||


* Inaccessible region teamwork | * Inaccessible region teamwork | ||

**youbot+drone - a drone maps the environment (for example a maze) and a ground vehicle uses this information to traverse the maze | **youbot+drone - a drone maps the environment (for example a maze) and a ground vehicle uses this information to traverse the maze | ||

**youbot+robotont - youbot cannot go up ledges, but it can lift a smaller robot, such as robotont, up a ledge. | |||

<hr> | |||

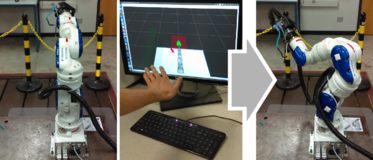

=== Mirroring human hand movements on industrial robots === | |||

The goal of this project is to integrate continuous control of an industrial robot manipulator with a gestural telerobotics interface. The recommended tools for this thesis project are Leap Motion Controller or a standard web camera, Ultraleap, Universal Robot UR5 manipulator, and ROS. | |||

<hr> | |||

== | === ROBOTONT: TeMoto for robotont === | ||

Swarm-management and UMRF-based task loading for robotont using [https://github.com/temoto-framework TeMoto] framework. | |||

<hr> | |||

== | === Enhancing teleoperation control interface with augmented cues to provoke caution === | ||

The task is to create a telerobot control interface where the video feed from the remote site and/or a mixed-reality scene is augmented with visual cues to provoke caution in the human operator. | |||

<hr> | |||

== | === Robot-to-human interaction === | ||

As robots and autonomous machines start sharing the same space as humans, their actions need to be understood by the people occupying the same space. For instance, a human worker needs to understand what the robot partner is planning next or a pedestrian needs to clearly comprehend the behaviour of a driverless vehicle. To reduce the ambiguity, the robot needs mechanisms to convey its intent (whatever it is going to do next). The aim of the thesis is to outline existing methods for machines to convey their intent and develop a unified model interface for expressing that intent. | |||

<hr> | |||

== | === Gaze-based handover prediction === | ||

When a human needs to pass an object to a robot manipulator, the robot must understand where in 3D space the object handover occurs and then plan an appropriate motion. Human gaze can be used as the input for predicting which object to track. The thesis activities involve camera-based eye tracking and safe motion-planning. | |||

<hr> | |||

== | === ROBOTONT: Human-height human-robot interface for Robotont ground robot === | ||

Robotont is an ankle-high flat mobile robot. For humans to interact with Robotont, there is a need for a compact and lightweight mechanical structure that is tall enough for comfortable human-robot interaction. The objective of the thesis is to develop the mechanical designs and build prototypes that ensure stable operation and meet the aesthetic requirements for use in public places. | |||

<hr> | |||

== | === Stratos Explore Ultraleap demonstrator for robotics === | ||

The aim of this thesis is to systematically analyze the strengths and limitations of the Stratos Explore Ultraleap device in the context of controlling a robot and, subsequently, to implement a demonstrator application that shows its applicability for robot control. | |||

<hr> | |||

== | === Mixed-reality scene creation for vehicle teleoperation === | ||

Fusing different sensory feeds for creating a high-usability teleoperation scene. | |||

<hr> | |||

=== Validation study for AR-based robot user-interfaces === | |||

Designing and carrying out a user study to validate the functionality and usability of a human-robot interface. | |||

<hr> | |||

== | === Quantitative Evaluation of the Situation Awareness during the Teleoperation of an Urban Vehicle === | ||

The goal of this thesis is to develop the methodology for measuring the operator's situation awareness while operating an autonomous urban vehicle. Potential metrics could include driving accuracy, speed, and latency. This thesis will be conducted as part of the activities at the [https://adl.cs.ut.ee Autonomous Driving Lab]. | |||

<hr> | |||

=== Sign-language-based control for robots === | |||

The goal of this thesis is to design and implement the means for interacting with a robot via conventional sign language, i.e. the human can command a robot by using sign language. | |||

<hr> | |||

=== LLM-based task planning for robots === | |||

The goal of this thesis is to enable high-level task planning for autonomous robots performing general-purpose tasks. The thesis would leverage an LLM's reasoning and the TeMoto Action Engine to achieve natural task negotiation, planning, and execution. | |||

<hr> | |||

=== ROBOTONT: Development of CoppeliaSim-based simulation and a digital twin === | |||

The objective of the thesis is to develop an easy-to-use simulation for Robotont gen3 using CoppeliaSim (https://www.coppeliarobotics.com/). | |||

<hr> | |||

=== Application of VLA (Vision-Language-Action) models in robotics === | |||

The goal of this thesis is to apply VLA models to real-world robotics. The thesis project will involve topics related to robots, machine learning, and transformers. | |||

<hr> | |||

= Completed projects = | = Completed projects = | ||

== | == PhD theses == | ||

*Robert Valner, [https://hdl.handle.net/10062/105994 Design of TeMoto, a software framework for dependable, adaptive, and collaborative autonomous robots] [TeMoto – töökindlate, adaptiivsete ja koostöövõimeliste autonoomsete robotite arendamise tarkvararaamistik], PhD thesis, 2024 | |||

*Houman Masnavi, [https://hdl.handle.net/10062/91394 Visibility aware navigation] [Nähtavust arvestav navigatsioon], PhD thesis, 2023 | |||

== Master's theses == | |||

*Pavel Šumejko, [http://hdl.handle.net/10062/64320 Robust Solution for Extrinsic Calibration of a 2D Laser-Rangefinder and a Monocular USB Camera], MS thesis, 2019 | *Asier Mandiola Arrizabalaga, Haptic-Based Teleoperation of Robots, MS thesis, 2025 | ||

*Dzvezdana Arsovska, [http://hdl.handle.net/10062/64321 Building an Efficient and Secure Software Supply Pipeline for Aerial Robotics Application], MS thesis, 2019 | *Dāvis Krūmiņš, Web-based Robotics Lab for Effortless ROS2 Development, MS thesis, 2025 | ||

*Julian Rene Leclerc, Natural Language Human-Robot Interaction: A Modular Framework for Conversational Robot Control Using Large Language Models, MS thesis, 2025 | |||

*Miriam Calafa’, Designing Multimodal Emotional Expression for a Robotic Study Companion, MS thesis, 2025 | |||

*Iryna Hurova, Model-based Planning Using GPU-accelerated Simulator as a World Model, MS thesis, 2025 | |||

*Sander Toma Võrk, Elektrooniliste termomeetrite automaatse kalibreerimissüsteemi väljatöötamine ja rakendamine, MS thesis, 2025 | |||

*Carl Hjalmar Love Hult, SurfMotion: An Open Source Pipeline for Robotic Pipe Cutting and Welding, MS thesis, 2025 | |||

*Paola Avalos Conchas, Socially Aware Planning for Indoor Navigation, MS thesis, 2025 | |||

*Robert Allik, Validation of NoMaD as a Global Planner for Mobile Robots, MS thesis, 2024 | |||

*Rauno Põlluäär, [https://comserv.cs.ut.ee/ati_thesis/datasheet.php?id=79393 Designing and Implementing a Bird’s-eye View Interface for a Self-driving Vehicle’s Teleoperation System] [Isejuhtiva sõiduki kaugjuhtimissüsteemile linnuvaate kasutajaliidese loomine], MS thesis, 2024 | |||

*Gautier Reynes, VR-Enhanced Remote Inspection Framework for Semi-Autonomous Robot Fleet, MS thesis, 2024 | |||

*Eva Mõtshärg, [https://comserv.cs.ut.ee/ati_thesis/datasheet.php?id=77385 3D-prinditava kere disain ja analüüs vabavaralisele haridusrobotile Robotont] [Design and Analysis of a 3D Printable Chassis for the Open Source Educational Robot Robotont], MS thesis, 2023 | |||

*Farnaz Baksh, [https://dspace.ut.ee/items/a7a9cc15-27e9-450c-94e8-3267f0c95c56 An Open-source Robotic Study Companion for University Students] [Avatud lähtekoodiga robotõpikaaslane üliõpilastele], MS thesis, 2023 | |||

*Igor Rybalskii, [http://hdl.handle.net/10062/83028 Augmented reality (AR) for enabling human-robot collaboration with ROS robots] [Liitreaalsus inimese ja roboti koostöö võimaldamiseks ROS-i robotitega], MS thesis, 2022 | |||

*Md. Maniruzzaman, [http://hdl.handle.net/10062/83025 Object search and retrieval in indoor environment using a Mobile Manipulator] [Objektide otsimine ja teisaldamine siseruumides mobiilse manipulaatori abil], MS thesis, 2022 | |||

*Allan Kustavus, [http://hdl.handle.net/10062/72651 Design and Implementation of a Generalized Resource Management Architecture in the TeMoto Software Framework] [Üldise ressursihalduri disain ja teostus TeMoto tarkvara raamistikule], MS thesis, 2021 | |||

*Kristina Meister, [http://hdl.handle.net/10062/72350 External human-vehicle interaction - a study in the context of an autonomous ride-hailing service], MS thesis, 2021 | |||

*Muhammad Usman, [http://hdl.handle.net/10062/72126 Development of an Optimization-Based Motion Planner and Its ROS Interface for a Non-Holonomic Mobile Manipulator] [Optimeerimisele baseeruva liikumisplaneerija arendamine ja selle ROSi liides mitteholonoomse mobiilse manipulaatori jaoks], MS thesis, 2020 | |||

*Maarika Oidekivi, [http://hdl.handle.net/10062/72119 Masina kavatsuse väljendamine ja tõlgendamine] [Communicating and interpreting machine intent], MS thesis, 2020 | |||

*Houman Masnavi, [http://hdl.handle.net/10062/72118 Multi-Robot Motion Planning for Shared Payload Transportation] [Rajaplaneerimine multi-robot süsteemile jagatud lasti transportimisel], MS thesis, 2020 | |||

*Fabian Ernesto Parra Gil, [http://hdl.handle.net/10062/72112 Implementation of Robot Manager Subsystem for Temoto Software Framework] [Robotite Halduri alamsüsteemi väljatöötamine tarkvararaamistikule TEMOTO], MS thesis, 2020 | |||

*Zafarullah, [http://hdl.handle.net/10062/72125 Gaze Assisted Neural Network based Prediction of End-Point of Human Reaching Trajectories], MS thesis, 2020 | |||

*Madis K Nigol, [http://hdl.handle.net/10062/64339 Õppematerjalid robotplatvormile Robotont] [Study materials for robot platform Robotont], MS thesis, 2019 | |||

*Renno Raudmäe, [http://hdl.handle.net/10062/64341 Avatud robotplatvorm Robotont] [Open source robotics platform Robotont], MS thesis, 2019 | |||

*Asif Sattar, [http://hdl.handle.net/10062/64352 Human detection and distance estimation with monocular camera using YOLOv3 neural network] [Inimeste tuvastamine ning kauguse hindamine kasutades kaamerat ning YOLOv3 tehisnärvivõrku], MS thesis, 2019 | |||

*Ragnar Margus, [http://hdl.handle.net/10062/64337 Kergliiklusvahendite jagamisteenuseks vajaliku positsioneerimismooduli loomine ja uurimine] [Development and testing of an IoT module for electric vehicle sharing service], MS thesis, 2019 | |||

*Pavel Šumejko, [http://hdl.handle.net/10062/64320 Robust Solution for Extrinsic Calibration of a 2D Laser-Rangefinder and a Monocular USB Camera] [Meetod 2D laserkaugusmõõdiku ja USB-kaamera väliseks kalibreerimiseks], MS thesis, 2019 | |||

*Dzvezdana Arsovska, [http://hdl.handle.net/10062/64321 Building an Efficient and Secure Software Supply Pipeline for Aerial Robotics Application] [Efektiivne ja turvaline tarkvaraarendusahel lennurobootika rakenduses], MS thesis, 2019 | |||

*Tõnis Tiimus, [http://hdl.handle.net/10062/60713 Visuaalselt esilekutsutud potentsiaalidel põhinev aju-arvuti liides robootika rakendustes] [A VEP-based BCI for robotics applications], MS thesis, 2018 | |||

*Martin Appo, [http://hdl.handle.net/10062/60718 Hardware-agnostic compliant control ROS package for collaborative industrial manipulators] [Riistvarapaindlik ROSi tarkvarapakett tööstuslike robotite mööndlikuks juhtimiseks], MS thesis, 2018 | |||

*Hassan Mahmoud Shehawy Elhanash, [http://hdl.handle.net/10062/60711 Optical Tracking of Forearm for Classifying Fingers Poses] [Küünarvarre visuaalne jälgimine ennustamaks sõrmede asendeid], MS thesis, 2018 | |||

== Bachelor's theses == | == Bachelor's theses == | ||

*Meelis Pihlap, [http://hdl.handle.net/10062/64292 Mitme roboti koostöö funktsionaalsuste väljatöötamine tarkvararaamistikule TeMoto | *Kaarel-Richard Kaarelson, [https://thesis.cs.ut.ee/12b3ed0c-b3c1-473e-ae13-5063fde9850a TeMoto Action Assistant: A Web-Based Human–Robot Interface for Designing UMRF Graphs] [TeMoto Action Assistant: Veebipõhine Inimese ja Roboti Interaktsiooni Tööriist UMRF Graafide Loomiseks], BS thesis, 2025 | ||

*Kaarel Mark, [http://hdl.handle.net/10062/64290 Liitreaalsuse kasutamine tootmisprotsessis asukohtade määramisel | *Oliver Voorel, Humanoidrobot Semuboti toitesüsteemi uuendamine, BS thesis, 2025 | ||

*Kätriin Julle, [http://hdl.handle.net/10062/64279 Roboti KUKA youBot riistvara ja ROS-tarkvara uuendamine | *Karl Rahn, Millimeeterlaine radari integreerimine avatud lähtekoodiga muruniiduki platvormil Open Mower, BS thesis, 2025 | ||

*Georg Astok, [http://hdl.handle.net/10062/64274 Roboti juhtimine virtuaalreaalsuses kasutades ROS-raamistikku | *Mattias Mäe, Sõiduki kaugjuhtimise viivituse mõõtmine, BS thesis, 2025 | ||

*Martin Hallist, [http://hdl.handle.net/10062/64275 Robotipõhine kaugkohalolu käte liigutuste ülekandmiseks | *Jürgen Kottise, Dockeri konteineritel põhinev haldustarkvara Robotont 3 õpperobotile, BS thesis, 2025 | ||

*Martin Kaur, Servodel põhinev sotsiaalse robotkäe süsteem SemuBotile, BS thesis, 2025 | |||

*Kristo Allaje, [http://hdl.handle.net/10062/60294 Draiveripakett käte jälgimise seadme Leap Motion™ kontroller kasutamiseks robootika arendusplatvormil ROS], BS thesis, 2018 | *Karl-Jürgen Siilak, Mass Portal Grand Pharaoh XD 3D-printeri uuendamine, BS thesis, 2025 | ||

*Martin Maidla, [http://hdl.handle.net/10062/60288 Avatud robotiarendusplatvormi Robotont omniliikumise ja odomeetria arendamine], BS thesis, 2018 | *Usman Ali Khan, Graphical programming interface for ROBOTONT, an open-source educational robot, BS thesis, 2025 | ||

*Raid Vellerind, [http://hdl.handle.net/10062/56559 Avatud robotiarendusplatvormi ROS võimekuse loomine Tartu Ülikooli Robotexi robootikaplatvormile], BS thesis, 2017 | *Robina Zvirgzdina, Development of an Autonomous Open-Source Inventory Performance Robot for the University of Tartu Library, BS thesis, 2025 | ||

*Omar Huseynli, ROS integration for the Semubot robot, BS thesis, 2025 | |||

*Albert Unn, [https://comserv.cs.ut.ee/ati_thesis/datasheet.php?id=79937 Suhtlusvõimekuse arendamine sotsiaalsele humanoidrobotile SemuBot] [Developing the ability to communicate for SemuBot, a social humanoid robot], BS thesis, 2024 | |||

*Veronika-Marina Volynets, [https://hdl.handle.net/10062/99943 Development of Control Electronics and Program for Robotont's Height Adjustment Mechanism] [Juhtelektroonika ja programmi väljatöötamine Robotondi kõrguse reguleerimise mehhanismile], BS thesis, 2024 | |||

*Nikita Kurenkov, [https://hdl.handle.net/10062/99880 Flexible Screen Integration and Development of Neck Movement Mechanism for Social Humanoid Robot SemuBot] [Humanoidroboti näo lahenduse leidmine ja rakendamine; humanoidroboti jaoks kaela mehhanismi väljatöötamine], BS thesis, 2024 | |||

*Elchin Huseynov, [https://hdl.handle.net/10062/99668 Design and Control of a Social Humanoid Robot - SemuBot’s Hand] [Sotsiaalse Humanoidroboti Disain ja Juhtimine - SemuBoti Käsi], BS thesis, 2024 | |||

*Veronika Podliesnova, [https://hdl.handle.net/10062/99636 Real-Time Detection of Robot Failures by Monitoring Operator’s Brain Activity with EEG-based Brain-Computer Interface] [Reaalajas Robotirikke Tuvastamine Operaatori Ajutegevuse Jälgimise teel EEG-põhise Aju-Arvuti Liidese abil], BS thesis, 2024 | |||

*Märten Josh Peedimaa, [https://hdl.handle.net/not-yet Rhea: An Open-Source Table Tennis Ball Launcher Robot for Multiball Training] [Avatud lähtekoodiga lauatennise palliviske robot mitmikpall treenimiseks], BS thesis, 2024 | |||

*Karl Sander Vinkel, [https://hdl.handle.net/not-yet Laadimisjaama ja transportkesta väljatöötamine õpperobotile Robotont] [Development of charging dock and transport case for Robotont], BS thesis, 2024 | |||

*Raimo Köidam, [https://hdl.handle.net/not-yet Valguslahenduse tarkvara väljatöötamine õpperobotile Robotont] [Development of light solution software for the educational robot Robotont], BS thesis, 2024 | |||

*Robert Valge, [https://hdl.handle.net/not-yet Toitepinge ja tarbevoolu monitoorimine ning toitehalduse püsivara loomine õpperobotil Robotont] [Monitoring supply voltage and current consumption and creating firmware for Robotont’s power management system], BS thesis, 2024 | |||

*Leonid Tšigrinski, [https://hdl.handle.net/not-yet Õpperoboti Robotont püsivara arhitektuuri uuendamine] [Education robot "Robotont" firmware architecture updating], BS thesis, 2024 | |||

*Andres Sakk, [https://hdl.handle.net/not-yet Avatud robotplatvormi Robotont 3 kasutajaliidese väljatöötamine] [Development of a user interface for the open robotics platform Robotont 3], BS thesis, 2024 | |||

*Kaur Kullamäe, [https://hdl.handle.net/not-yet Elektroonikalahendus ja püsivara robotkäe juhtimiseks sotsiaalsel humanoidrobotil SemuBot] [Building the SemuBot arm electronics system], BS thesis, 2024 | |||

*Kristjan Madis Kask, [https://hdl.handle.net/not-yet Käe mehaanika disain sotsiaalsele humanoidrobotile SemuBot] [Arm mechanics design for social humanoid robot SemuBot], BS thesis, 2024 | |||

*Sven-Ervin Paap, [https://hdl.handle.net/not-yet ROS2 draiver õpperobotile Robotont] [ROS2 driver for the educational robot Robotont], BS thesis, 2024 | |||

*Timur Nizamov, [https://hdl.handle.net/10062/99592 Audio System for the Social Humanoid Robot SemuBot] [Helisüsteem sotsiaalsele humanoidrobotile SemuBot], BS thesis, 2024 | |||

*Georgs Narbuts, [https://hdl.handle.net/10062/99554 Holonomic Motion Drive System of a Social Humanoid Robot SemuBot] [Sotsiaalse humanoidroboti SemuBoti holonoomne liikuv ajamisüsteem], BS thesis, 2024 | |||

*Iryna Hurova, [https://hdl.handle.net/10062/90638 Kitting station of the learning factory] [Õppetehase komplekteerimisjaam], BS thesis, 2023 | |||

*Paola Avalos Conchas, [https://hdl.handle.net/10062/90641 Payload transportation system of a learning factory] [Õppetehase kasuliku koorma transpordisüsteem], BS thesis, 2023 | |||

*Pille Pärnalaas, [https://hdl.handle.net/10062/93431 Pöördpõik ajami arendus robotplatvormile Robotont] [Development of swerve drive for robotic platform Robotont], BS thesis, 2023 | |||

*Carl Hjalmar Love Hult, [https://hdl.handle.net/10062/90673 Using and Evaluating the Real-time Spatial Perception System Hydra in Real-world Scenarios] [Reaalajas toimiva ruumilise taju süsteemi Hydra kasutamine ja hindamine praktilistes stsenaariumides], BS thesis, 2023 | |||

*Priit Rooden, [https://hdl.handle.net/10062/93432 Autonoomse laadimislahenduse väljatöötamine õpperobotile Robotont] [Development of an autonomous charging solution for the robot platform Robotont], BS thesis, 2023 | |||

*Marko Muro, [https://hdl.handle.net/10062/93429 Robotondi akulahenduse ning 12 V pingeregulaatori prototüüpimine] [Prototyping battery solution and 12 V voltage regulator for Robotont], BS thesis, 2023 | |||

*Danel Leppenen, [https://hdl.handle.net/10062/93427 Nav2 PYIF: Python-based Motion Planning for ROS 2 Navigation 2] [Nav 2 PYIF: Pythoni põhine liikumise planeerija ROS 2 Navigation 2-le], BS thesis, 2023 | |||

*Kertrud Geddily Küüt, [https://hdl.handle.net/10062/93424 Kõrgust reguleeriv mehhanism Robotondile] [Height adjusting mechanism for Robotont], BS thesis, 2023 | |||

*Ingvar Drikkit, [https://hdl.handle.net/10062/93421 Lisaseadmete võimekuse arendamine haridusrobotile Robotont] [Developing add-on device support for the educational robot Robotont], BS thesis, 2023 | |||

*Kristo Pool, [https://hdl.handle.net/10062/93430 MoveIt 2 õppematerjalid] [Learning materials for MoveIt 2], BS thesis, 2023 | |||

*Erki Veeväli, [https://hdl.handle.net/10062/93434 Development of a Continuous Teleoperation System for Urban Road Vehicle] [Linnasõiduki pideva kaugjuhtimissüsteemi arendus], BS thesis, 2023 | |||

*Aleksandra Doroshenko, [https://hdl.handle.net/10062/93420 Haiglates inimesi juhatava roboti disain] [Hospital guide robot design], BS thesis, 2023 | |||

*Hui Shi, [http://hdl.handle.net/10062/83043 Expanding the Open-source ROS Software Package opencv_apps with Dedicated Blob Detection Functionality] [Avatud lähtekoodiga ROS-i tarkvarakimbu opencv_apps laiendamine laigutuvasti funktsioonaalsusega], BS thesis, 2022 | |||

*Dāvis Krūmiņš, [http://hdl.handle.net/10062/83040 Web-based learning and software development environment for remote access of ROS robots] [Veebipõhine õppe- ja tarkvaraarenduse keskkond ROS robotite juurdepääsuks kaugteel], BS thesis, 2022 | |||

*Anna Jakovleva, [http://hdl.handle.net/10062/83037 Roboquiz - an interactive human-robot game] [Roboquiz - interaktiivne inimese ja roboti mäng], BS thesis, 2022 | |||

*Kristjan Laht, [https://comserv.cs.ut.ee/ati_thesis/datasheet.php?id=74702&year=2022 Robot Localization with Fiducial Markers] [Roboti lokaliseerimine koordinaatmärkidega], BS thesis, 2022 | |||

*Hans Pärtel Pani, [http://hdl.handle.net/10062/83008 ROS draiver pehmerobootika haaratsile] [ROS Driver for Soft Robotic Gripper], BS thesis, 2022 | |||

*Markus Erik Sügis, [http://hdl.handle.net/10062/83015 Jagatud juhtimise põhimõttel realiseeritud robotite kaugjuhtimissüsteem] [A continuous teleoperating system based on shared control concept], BS thesis, 2022 | |||

*Taaniel Küla, [http://hdl.handle.net/10062/83007 ROS2 platvormile keevitusroboti tarkvara portotüübi loomine kasutades UR5 robot-manipulaatorit] [Welding robot software prototype for ROS2 using UR5 robot arm], BS thesis, 2022 | |||

*Rauno Põlluäär, [http://hdl.handle.net/10062/72672 Veebirakendus-põhine kasutajaliides avatud robotplatvormi Robotont juhtimiseks ja haldamiseks] [Web application-based user interface for controlling and managing open-source robotics platform Robotont], BS thesis, 2021

*Hendrik Olesk, [http://hdl.handle.net/10062/72664 Nägemisulatuses kaugjuhitava mobiilse robotmanipulaatori kasutajamugavuse tõstmine] [Improving the usability of a mobile manipulator robot for line-of-sight remote control], BS thesis, 2021

*Tarvi Tepandi, [http://hdl.handle.net/10062/72665 Segareaalsusel põhinev kasutajaliides mobiilse roboti kaugjuhtimiseks Microsoft HoloLens 2 vahendusel] [Mixed-reality user interface for teleoperating mobile robots with Microsoft HoloLens 2], BS thesis, 2021

*Rudolf Põldma, [http://hdl.handle.net/10062/72674 Tartu linna Narva maantee ringristmiku digikaksik] [Digital twin for Narva street roundabout in Tartu], BS thesis, 2021

*Kwasi Akuamoah Boateng, [http://hdl.handle.net/10062/72804 Digital Twin of a Teaching and Learning Robotics Lab] [Robotite õpetamise ja õppimise labori digitaalne kaksik], BS thesis, 2021

*Karina Sein, [http://hdl.handle.net/10062/72102 Eestikeelse kõnesünteesi võimaldamine robootika arendusplatvormil ROS] [Enabling Estonian speech synthesis on the Robot Operating System (ROS)], BS thesis, 2020

*Ranno Mäesepp, [http://hdl.handle.net/10062/72100 Takistuste vältimise lahendus õpperobotile Robotont] [Obstacle avoidance solution for educational robot platform Robotont], BS thesis, 2020

*Igor Rybalskii, [http://hdl.handle.net/10062/72060 Gesture Detection Software for Human-Robot Collaboration] [Žestituvastus tarkvara inimese ja roboti koostööks], BS thesis, 2020

*Meelis Pihlap, [http://hdl.handle.net/10062/64292 Mitme roboti koostöö funktsionaalsuste väljatöötamine tarkvararaamistikule TeMoto] [Multi-robot collaboration functionalities for robot software development framework TeMoto], BS thesis, 2019

*Kaarel Mark, [http://hdl.handle.net/10062/64290 Liitreaalsuse kasutamine tootmisprotsessis asukohtade määramisel] [Augmented reality for location determination in manufacturing], BS thesis, 2019

*Kätriin Julle, [http://hdl.handle.net/10062/64279 Roboti KUKA youBot riistvara ja ROS-tarkvara uuendamine] [Upgrading robot KUKA youBot’s hardware and ROS-software], BS thesis, 2019

*Georg Astok, [http://hdl.handle.net/10062/64274 Roboti juhtimine virtuaalreaalsuses kasutades ROS-raamistikku] [Creating virtual reality user interface using only ROS framework], BS thesis, 2019

*Martin Hallist, [http://hdl.handle.net/10062/64275 Robotipõhine kaugkohalolu käte liigutuste ülekandmiseks] [Teleoperation robot for arms motions], BS thesis, 2019

*Ahmed Hassan Helmy Mohamed, [http://hdl.handle.net/10062/63946 Software integration of autonomous robot system for mixing and serving drinks] [Jooke valmistava ja serveeriva robotsüsteemi tarkvaralahendus], BS thesis, 2019

*Kristo Allaje, [http://hdl.handle.net/10062/60294 Draiveripakett käte jälgimise seadme Leap Motion™ kontroller kasutamiseks robootika arendusplatvormil ROS] [Driver package for using Leap Motion™ controller on the robotics development platform ROS], BS thesis, 2018

*Martin Maidla, [http://hdl.handle.net/10062/60288 Avatud robotiarendusplatvormi Robotont omniliikumise ja odomeetria arendamine] [Omnimotion and odometry development for open robot development platform Robotont], BS thesis, 2018

*Raid Vellerind, [http://hdl.handle.net/10062/56559 Avatud robotiarendusplatvormi ROS võimekuse loomine Tartu Ülikooli Robotexi robootikaplatvormile] [ROS driver development for the University of Tartu’s Robotex robotics platform], BS thesis, 2017

*Kalle-Gustav Kruus, [http://hdl.handle.net/10062/32440 Jalgpalliroboti löögimehhanismi elektroonikalahendus] [Driver circuit for a kicking mechanism of a football robot], BS thesis, 2013

*Sven Kautlenbach, [http://hdl.handle.net/10062/25570 Autonoomne seade elastsusmooduli mõõtmiseks] [Electronic device for Young’s modulus measurements], BS thesis, 2011

[[Category:Theses Topics]]

Latest revision as of 15:04, 10 September 2025

Projects in Advanced Robotics

The main objective of the following projects is to give students experience in working with advanced robotics technology. Our group is active in several R&D projects involving human-robot collaboration, intuitive teleoperation of robots, and autonomous navigation of unmanned mobile platforms. Our main software platforms are Robot Operating System (ROS) for developing software for advanced robot systems and Gazebo for running realistic robotic simulations.

For further information, contact Karl Kruusamäe.

The following is not an exhaustive list of all available thesis/research topics.

Highlighted thesis topics for the 2025/2026 study year

- Application of VLA (Vision-Language-Action) models in robotics

- LLM-based task planning for robots

- ROBOTONT: analysis of different options as on-board computers

- SemuBOT: multiple topics

- ROBOTONT: integrating a graphical programming interface

- Sign-language-based control for robots

- Robotic Study Companion: a social robot for students in higher education

- Quantitative Evaluation of the Situation Awareness during the Teleoperation of an Urban Vehicle

List of potential thesis topics

Our inventory includes but is not limited to:

ROBOTONT: Docker-Driven ROS Environment Switching

This thesis focuses on integrating Docker containers to manage and switch between different ROS (Robot Operating System) environments on Robotont. Currently, ROS is installed natively on Robotont's Ubuntu-based onboard computer, limiting flexibility in system recovery and configuration switching. The goal is to develop a Docker-based solution that allows users to switch between ROS environments directly from Robotont’s existing low-level menu interface.

ROBOTONT: designing and implementing a communication protocol for additional devices

Robotont currently includes a designated area on its front for attaching additional devices, such as an ultrasonic range finder, a servo motor connected to an Arduino, or a MikroBUS device. This thesis aims to design and implement a standard communication protocol that will enable seamless integration and control of these devices through the high-level ROS framework running on Robotont’s onboard computer.

ROBOTONT: analysis of different options as on-board computers

Currently, ROBOTONT uses an Intel NUC as its onboard computer. The goal of this thesis is to validate Robotont's software stack on alternative compute devices (e.g. Raspberry Pi 4, Intel Compute Stick, and NVIDIA Jetson Nano) and benchmark their performance for the most popular Robotont use-case demos (e.g. webapp teleop, ar-tag steering, 2D mapping, and 3D mapping). The objective is to propose at least two different compute solutions: one that optimizes for cost and another that optimizes for performance.

ROBOTONT Lite

The goal of this thesis is to optimize the ROBOTONT platform for cost by replacing the onboard computer and sensor with low-cost alternatives while ensuring ROS/ROS2 software compatibility.

ROBOTONT: integrating a graphical programming interface

The goal of this thesis is to integrate a graphical programming solution (e.g. Scratch or Blockly) to enable programming of Robotont by non-experts. For an example, see https://doi.org/10.48550/arXiv.2011.13706

SemuBOT: multiple topics

In 2023/2024, many topics are being developed to support the open-source humanoid robot project SemuBOT (https://www.facebook.com/semubotmtu/).

Robotic Study Companion: a social robot for students in higher education

Potential Topics:

- Enhance the Robot's Speech/Natural Language Capabilities

- Build a Local Language Model for the RSC

- Develop and Program the Robot’s Behavior and Personality

- Build a Digital Twin Simulation for Multimodal Interaction

- Explore the Use of the RSC as an Affective Robot to Address Students’ Academic Emotions

- Explore and Implement Cybersecurity Measures for Social Robots

More info on GitHub; reach out to farnaz.baksh@ut.ee for details.

Virtual reality user interface (VRUI) for intuitive teleoperation system

Enhancing the user experience of a virtual reality UI developed by Georg Astok. Potentially adding virtual reality capability to a gesture- and natural-language-based robot teleoperation system.

Modeling humans for human-robot interaction

True human-robot collaboration means that the robot must understand the actions, intentions, and state of its human partner. This work involves using cameras and other human sensors for digitally representing and modelling humans. There are multiple stages for modeling: a) physical models of human kinematics and dynamics; b) higher-level models for recognizing human intent.

Robotic avatar for telepresence

Integrating hand gestures and head movements to control a robot avatar in a virtual reality user interface.

Detection of hardware and software resources for smart integration of robots

The vast majority of today’s robotic applications rely on hard-coded device and algorithm usage. This project focuses on developing Resource Snooper software that can detect the addition or removal of resources to enable dynamic reconfiguration of robotic systems. This project is developed as a subsystem of TeMoto.

Human-Robot and Robot-Robot collaboration applications

Creating a demo or analysis of software capabilities related to human-robot or robot-robot teams:

- human-robot collaborative assembly

- distributed mapping: analysis and demo of existing ROS packages for multi-robot mapping (e.g., segmap https://youtu.be/JJhEkIA1xSE)

- Inaccessible region teamwork

- youbot+drone - a drone maps the environment (for example, a maze) and the ground vehicle uses this information to traverse the maze

- youbot+robotont - youbot cannot go up ledges, but it can lift a smaller robot, such as robotont, up a ledge

Mirroring human hand movements on industrial robots

The goal of this project is to integrate continuous control of an industrial robot manipulator with a gestural telerobotics interface. The recommended tools for this thesis project are Leap Motion Controller or a standard web camera, Ultraleap, Universal Robot UR5 manipulator, and ROS.

ROBOTONT: TeMoto for robotont

Swarm management and UMRF-based task loading for robotont using the TeMoto framework.

Enhancing teleoperation control interface with augmented cues to provoke caution

The task is to create a telerobot control interface where the video feed from the remote site and/or a mixed-reality scene is augmented with visual cues to provoke caution in the human operator.

Robot-to-human interaction

As robots and autonomous machines start sharing the same space as humans, their actions need to be understood by the people occupying that space. For instance, a human worker needs to understand what the robot partner is planning next, or a pedestrian needs to clearly comprehend the behaviour of a driverless vehicle. To reduce the ambiguity, the robot needs mechanisms to convey its intent (whatever it is going to do next). The aim of the thesis is to outline existing methods for machines to convey their intent and develop a unified model interface for expressing that intent.

Gaze-based handover prediction

When a human needs to pass an object to a robot manipulator, the robot must understand where in 3D space the object handover occurs and then plan an appropriate motion. Human gaze can be used as the input for predicting which object to track. The thesis activities involve camera-based eye tracking and safe motion planning.

ROBOTONT: Human-height human-robot interface for Robotont ground robot

Robotont is an ankle-high flat mobile robot. For humans to interact with Robotont, there is a need for a compact and lightweight mechanical structure that is tall enough for comfortable human-robot interaction. The objective of the thesis is to develop the mechanical designs and build prototypes that ensure stable operation and meet the aesthetic requirements for use in public places.

Stratos Explore Ultraleap demonstrator for robotics

The aim of this thesis is to systematically analyze the strengths and limitations of the Stratos Explore Ultraleap device in the context of controlling a robot, and subsequently to implement a demonstrator application that shows its applicability to robot control.

Mixed-reality scene creation for vehicle teleoperation

Fusing different sensory feeds to create a high-usability teleoperation scene.

Validation study for AR-based robot user-interfaces

Designing and carrying out a user study to validate the functionality and usability of a human-robot interface.

Quantitative Evaluation of the Situation Awareness during the Teleoperation of an Urban Vehicle

The goal of this thesis is to develop the methodology for measuring the operator's situation awareness while operating an autonomous urban vehicle. Potential metrics could include driving accuracy, speed, and latency. This thesis will be conducted as part of the activities at the Autonomous Driving Lab.

Sign-language-based control for robots

The goal of this thesis is to design and implement the means for interacting with a robot via conventional sign language, i.e. the human can command a robot by using sign language.

LLM-based task planning for robots

The goal of this thesis is to enable high-level task planning for autonomous robots performing general-purpose tasks. The thesis would leverage an LLM's reasoning and the TeMoto Action Engine to achieve natural task negotiation, planning, and execution.

ROBOTONT: Development of CoppeliaSim-based simulation and a digital twin

The objective of the thesis is to develop an easy-to-use simulation for Robotont gen3 using CoppeliaSim (https://www.coppeliarobotics.com/).

Application of VLA (Vision-Language-Action) models in robotics

The goal of this thesis is to apply VLA models to real-world robotics. The thesis project will involve topics related to robots, machine learning, and transformers.

Completed projects

PhD theses

- Robert Valner, Design of TeMoto, a software framework for dependable, adaptive, and collaborative autonomous robots [TeMoto – töökindlate, adaptiivsete ja koostöövõimeliste autonoomsete robotite arendamise tarkvararaamistik], PhD thesis, 2024

- Houman Masnavi, Visibility aware navigation [Nähtavust arvestav navigatsioon], PhD thesis, 2023

Master's theses

- Asier Mandiola Arrizabalaga, Haptic-Based Teleoperation of Robots, MS thesis, 2025

- Dāvis Krūmiņš, Web-based Robotics Lab for Effortless ROS2 Development, MS thesis, 2025

- Julian Rene Leclerc, Natural Language Human-Robot Interaction: A Modular Framework for Conversational Robot Control Using Large Language Models, MS thesis, 2025

- Miriam Calafa’, Designing Multimodal Emotional Expression for a Robotic Study Companion, MS thesis, 2025

- Iryna Hurova, Model-based Planning Using GPU-accelerated Simulator as a World Model, MS thesis, 2025

- Sander Toma Võrk, Elektrooniliste termomeetrite automaatse kalibreerimissüsteemi väljatöötamine ja rakendamine [Development and implementation of an automatic calibration system for electronic thermometers], MS thesis, 2025

- Carl Hjalmar Love Hult, SurfMotion: An Open Source Pipeline for Robotic Pipe Cutting and Welding, MS thesis, 2025

- Paola Avalos Conchas, Socially Aware Planning for Indoor Navigation, MS thesis, 2025

- Robert Allik, Validation of NoMaD as a Global Planner for Mobile Robots, MS thesis, 2024

- Rauno Põlluäär, Designing and Implementing a Bird’s-eye View Interface for a Self-driving Vehicle’s Teleoperation System [Isejuhtiva sõiduki kaugjuhtimissüsteemile linnuvaate kasutajaliidese loomine], MS thesis, 2024

- Gautier Reynes, VR-Enhanced Remote Inspection Framework for Semi-Autonomous Robot Fleet, MS thesis, 2024

- Eva Mõtshärg, 3D-prinditava kere disain ja analüüs vabavaralisele haridusrobotile Robotont [Design and Analysis of a 3D Printable Chassis for the Open Source Educational Robot Robotont], MS thesis, 2023

- Farnaz Baksh, An Open-source Robotic Study Companion for University Students [Avatud lähtekoodiga robotõpikaaslane üliõpilastele], MS thesis, 2023

- Igor Rybalskii, Augmented reality (AR) for enabling human-robot collaboration with ROS robots [Liitreaalsus inimese ja roboti koostöö võimaldamiseks ROS-i robotitega], MS thesis, 2022

- Md. Maniruzzaman, Object search and retrieval in indoor environment using a Mobile Manipulator [Objektide otsimine ja teisaldamine siseruumides mobiilse manipulaatori abil], MS thesis, 2022

- Allan Kustavus, Design and Implementation of a Generalized Resource Management Architecture in the TeMoto Software Framework [Üldise ressursihalduri disain ja teostus TeMoto tarkvara raamistikule], MS thesis, 2021

- Kristina Meister, External human-vehicle interaction - a study in the context of an autonomous ride-hailing service, MS thesis, 2021

- Muhammad Usman, Development of an Optimization-Based Motion Planner and Its ROS Interface for a Non-Holonomic Mobile Manipulator [Optimeerimisele baseeruva liikumisplaneerija arendamine ja selle ROSi liides mitteholonoomse mobiilse manipulaatori jaoks], MS thesis, 2020

- Maarika Oidekivi, Masina kavatsuse väljendamine ja tõlgendamine [Communicating and interpreting machine intent], MS thesis, 2020

- Houman Masnavi, Multi-Robot Motion Planning for Shared Payload Transportation [Rajaplaneerimine multi-robot süsteemile jagatud lasti transportimisel], MS thesis, 2020

- Fabian Ernesto Parra Gil, Implementation of Robot Manager Subsystem for Temoto Software Framework [Robotite Halduri alamsüsteemi väljatöötamine tarkvararaamistikule TEMOTO], MS thesis, 2020

- Zafarullah, Gaze Assisted Neural Network based Prediction of End-Point of Human Reaching Trajectories, MS thesis, 2020

- Madis K Nigol, Õppematerjalid robotplatvormile Robotont [Study materials for robot platform Robotont], MS thesis, 2019

- Renno Raudmäe, Avatud robotplatvorm Robotont [Open source robotics platform Robotont], MS thesis, 2019

- Asif Sattar, Human detection and distance estimation with monocular camera using YOLOv3 neural network [Inimeste tuvastamine ning kauguse hindamine kasutades kaamerat ning YOLOv3 tehisnärvivõrku], MS thesis, 2019

- Ragnar Margus, Kergliiklusvahendite jagamisteenuseks vajaliku positsioneerimismooduli loomine ja uurimine [Development and testing of an IoT module for electric vehicle sharing service], MS thesis, 2019

- Pavel Šumejko, Robust Solution for Extrinsic Calibration of a 2D Laser-Rangefinder and a Monocular USB Camera [Meetod 2D laserkaugusmõõdiku ja USB-kaamera väliseks kalibreerimiseks], MS thesis, 2019

- Dzvezdana Arsovska, Building an Efficient and Secure Software Supply Pipeline for Aerial Robotics Application [Efektiivne ja turvaline tarkvaraarendusahel lennurobootika rakenduses], MS thesis, 2019

- Tõnis Tiimus, Visuaalselt esilekutsutud potentsiaalidel põhinev aju-arvuti liides robootika rakendustes [A VEP-based BCI for robotics applications], MS thesis, 2018

- Martin Appo, Hardware-agnostic compliant control ROS package for collaborative industrial manipulators [Riistvarapaindlik ROSi tarkvarapakett tööstuslike robotite mööndlikuks juhtimiseks], MS thesis, 2018

- Hassan Mahmoud Shehawy Elhanash, Optical Tracking of Forearm for Classifying Fingers Poses [Küünarvarre visuaalne jälgimine ennustamaks sõrmede asendeid], MS thesis, 2018

Bachelor's theses

- Kaarel-Richard Kaarelson, TeMoto Action Assistant: A Web-Based Human–Robot Interface for Designing UMRF Graphs [TeMoto Action Assistant: Veebipõhine Inimese ja Roboti Interaktsiooni Tööriist UMRF Graafide Loomiseks], BS thesis, 2025

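- Oliver Voorel, Humanoidrobot Semuboti toitesüsteemi uuendamine [Upgrading the power system of the humanoid robot Semubot], BS thesis, 2025

- Karl Rahn, Millimeeterlaine radari integreerimine avatud lähtekoodiga muruniiduki platvormil Open Mower [Integrating a millimeter-wave radar on the open-source lawn mower platform Open Mower], BS thesis, 2025

- Mattias Mäe, Sõiduki kaugjuhtimise viivituse mõõtmine [Measuring vehicle teleoperation latency], BS thesis, 2025

- Jürgen Kottise, Dockeri konteineritel põhinev haldustarkvara Robotont 3 õpperobotile [Docker-container-based management software for the educational robot Robotont 3], BS thesis, 2025

- Martin Kaur, Servodel põhinev sotsiaalse robotkäe süsteem SemuBotile [Servo-based social robot arm system for SemuBot], BS thesis, 2025

- Karl-Jürgen Siilak, Mass Portal Grand Pharaoh XD 3D-printeri uuendamine [Upgrading the Mass Portal Grand Pharaoh XD 3D printer], BS thesis, 2025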

- Usman Ali Khan, Graphical programming interface for ROBOTONT, an open-source educational robot, BS thesis, 2025

- Robina Zvirgzdina, Development of an Autonomous Open-Source Inventory Performance Robot for the University of Tartu Library, BS thesis, 2025

- Omar Huseynli, ROS integration for the Semubot robot, BS thesis, 2025

- Albert Unn, Suhtlusvõimekuse arendamine sotsiaalsele humanoidrobotile SemuBot [Developing the ability to communicate for SemuBot, a social humanoid robot], BS thesis, 2024

- Veronika-Marina Volynets, Development of Control Electronics and Program for Robotont's Height Adjustment Mechanism [Juhtelektroonika ja programmi väljatöötamine Robotondi kõrguse reguleerimise mehhanismile], BS thesis, 2024

- Nikita Kurenkov, Flexible Screen Integration and Development of Neck Movement Mechanism for Social Humanoid Robot SemuBot [Humanoidroboti näo lahenduse leidmine ja rakendamine; humanoidroboti jaoks kaela mehhanismi väljatöötamine], BS thesis, 2024

- Elchin Huseynov, Design and Control of a Social Humanoid Robot - SemuBot’s Hand [Sotsiaalse Humanoidroboti Disain ja Juhtimine - SemuBoti Käsi], BS thesis, 2024

- Veronika Podliesnova, Real-Time Detection of Robot Failures by Monitoring Operator’s Brain Activity with EEG-based Brain-Computer Interface [Reaalajas Robotirikke Tuvastamine Operaatori Ajutegevuse Jälgimise teel EEG-põhise Aju-Arvuti Liidese abil], BS thesis, 2024

- Märten Josh Peedimaa, Rhea: An Open-Source Table Tennis Ball Launcher Robot for Multiball Training [Avatud lähtekoodiga lauatennise palliviske robot mitmikpall treenimiseks], BS thesis, 2024

- Karl Sander Vinkel, Laadimisjaama ja transportkesta väljatöötamine õpperobotile Robotont [Development of charging dock and transport case for Robotont], BS thesis, 2024

- Raimo Köidam, Valguslahenduse tarkvara väljatöötamine õpperobotile Robotont [Development of light solution software for the educational robot Robotont], BS thesis, 2024

- Robert Valge, Toitepinge ja tarbevoolu monitoorimine ning toitehalduse püsivara loomine õpperobotil Robotont [Monitoring supply voltage and current consumption and creating firmware for Robotont’s power management system], BS thesis, 2024

- Leonid Tšigrinski, Õpperoboti Robotont püsivara arhitektuuri uuendamine [Education robot "Robotont" firmware architecture updating], BS thesis, 2024

- Andres Sakk, Avatud robotplatvormi Robotont 3 kasutajaliidese väljatöötamine [Development of a user interface for the open robotics platform Robotont 3], BS thesis, 2024

- Kaur Kullamäe, Elektroonikalahendus ja püsivara robotkäe juhtimiseks sotsiaalsel humanoidrobotil SemuBot [Building the SemuBot arm electronics system], BS thesis, 2024

- Kristjan Madis Kask, Käe mehaanika disain sotsiaalsele humanoidrobotile SemuBot [Arm mechanics design for social humanoid robot SemuBot], BS thesis, 2024

- Sven-Ervin Paap, ROS2 draiver õpperobotile Robotont [ROS2 driver for the educational robot Robotont], BS thesis, 2024

- Timur Nizamov, Audio System for the Social Humanoid Robot SemuBot [Helisüsteem sotsiaalsele humanoidrobotile SemuBot], BS thesis, 2024

- Georgs Narbuts, Holonomic Motion Drive System of a Social Humanoid Robot SemuBot [Sotsiaalse humanoidroboti SemuBoti holonoomne liikuv ajamisüsteem], BS thesis, 2024

- Iryna Hurova, Kitting station of the learning factory [Õppetehase komplekteerimisjaam], BS thesis, 2023

- Paola Avalos Conchas, Payload transportation system of a learning factory [Õppetehase kasuliku koorma transpordisüsteem], BS thesis, 2023

- Pille Pärnalaas, Pöördpõik ajami arendus robotplatvormile Robotont [Development of swerve drive for robotic platform Robotont], BS thesis, 2023

- Carl Hjalmar Love Hult, Using and Evaluating the Real-time Spatial Perception System Hydra in Real-world Scenarios [Reaalajas toimiva ruumilise taju süsteemi Hydra kasutamine ja hindamine praktilistes stsenaariumides], BS thesis, 2023

- Priit Rooden, Autonoomse laadimislahenduse väljatöötamine õpperobotile Robotont [Development of an autonomous charging solution for the robot platform Robotont], BS thesis, 2023

- Marko Muro, Robotondi akulahenduse ning 12 V pingeregulaatori prototüüpimine [Prototyping battery solution and 12 V voltage regulator for Robotont], BS thesis, 2023

- Danel Leppenen, Nav2 PYIF: Python-based Motion Planning for ROS 2 Navigation 2 [Nav 2 PYIF: Pythoni põhine liikumise planeerija ROS 2 Navigation 2-le], BS thesis, 2023

- Kertrud Geddily Küüt, Kõrgust reguleeriv mehhanism Robotondile [Height adjusting mechanism for Robotont], BS thesis, 2023

- Ingvar Drikkit, Lisaseadmete võimekuse arendamine haridusrobotile Robotont [Developing add-on device support for the educational robot Robotont], BS thesis, 2023

- Kristo Pool, MoveIt 2 õppematerjalid [Learning materials for MoveIt 2], BS thesis, 2023

- Erki Veeväli, Development of a Continuous Teleoperation System for Urban Road Vehicle [Linnasõiduki pideva kaugjuhtimissüsteemi arendus], BS thesis, 2023

- Aleksandra Doroshenko, Haiglates inimesi juhatava roboti disain [Hospital guide robot design], BS thesis, 2023

- Hui Shi, Expanding the Open-source ROS Software Package opencv_apps with Dedicated Blob Detection Functionality [Avatud lähtekoodiga ROS-i tarkvarakimbu opencv_apps laiendamine laigutuvasti funktsioonaalsusega], BS thesis, 2022

- Dāvis Krūmiņš, Web-based learning and software development environment for remote access of ROS robots [Veebipõhine õppe- ja tarkvaraarenduse keskkond ROS robotite juurdepääsuks kaugteel], BS thesis, 2022

- Anna Jakovleva, Roboquiz - an interactive human-robot game [Roboquiz - interaktiivne inimese ja roboti mäng], BS thesis, 2022

- Kristjan Laht, Robot Localization with Fiducial Markers [Roboti lokaliseerimine koordinaatmärkidega], BS thesis, 2022

- Hans Pärtel Pani, ROS draiver pehmerobootika haaratsile [ROS Driver for Soft Robotic Gripper], BS thesis, 2022

- Markus Erik Sügis, Jagatud juhtimise põhimõttel realiseeritud robotite kaugjuhtimissüsteem [A continuous teleoperating system based on shared control concept], BS thesis, 2022

- Taaniel Küla, ROS2 platvormile keevitusroboti tarkvara portotüübi loomine kasutades UR5 robot-manipulaatorit [Welding robot software prototype for ROS2 using UR5 robot arm], BS thesis, 2022

- Rauno Põlluäär, Veebirakendus-põhine kasutajaliides avatud robotplatvormi Robotont juhtimiseks ja haldamiseks [Web application-based user interface for controlling and managing open-source robotics platform Robotont], BS thesis, 2021

- Hendrik Olesk, Nägemisulatuses kaugjuhitava mobiilse robotmanipulaatori kasutajamugavuse tõstmine [Improving the usability of a mobile manipulator robot for line-of-sight remote control], BS thesis, 2021

- Tarvi Tepandi, Segareaalsusel põhinev kasutajaliides mobiilse roboti kaugjuhtimiseks Microsoft HoloLens 2 vahendusel [Mixed-reality user interface for teleoperating mobile robots with Microsoft HoloLens 2], BS thesis, 2021

- Rudolf Põldma, Tartu linna Narva maantee ringristmiku digikaksik [Digital twin for Narva street roundabout in Tartu], BS thesis, 2021

- Kwasi Akuamoah Boateng, Digital Twin of a Teaching and Learning Robotics Lab [Robotite õpetamise ja õppimise labori digitaalne kaksik], BS thesis, 2021

- Karina Sein, Eestikeelse kõnesünteesi võimaldamine robootika arendusplatvormil ROS [Enabling Estonian speech synthesis on the Robot Operating System (ROS)], BS thesis, 2020

- Ranno Mäesepp, Takistuste vältimise lahendus õpperobotile Robotont [Obstacle avoidance solution for educational robot platform Robotont], BS thesis, 2020

- Igor Rybalskii, Gesture Detection Software for Human-Robot Collaboration [Žestituvastus tarkvara inimese ja roboti koostööks], BS thesis, 2020

- Meelis Pihlap, Mitme roboti koostöö funktsionaalsuste väljatöötamine tarkvararaamistikule TeMoto [Multi-robot collaboration functionalities for robot software development framework TeMoto], BS thesis, 2019

- Kaarel Mark, Liitreaalsuse kasutamine tootmisprotsessis asukohtade määramisel [Augmented reality for location determination in manufacturing], BS thesis, 2019

- Kätriin Julle, Roboti KUKA youBot riistvara ja ROS-tarkvara uuendamine [Upgrading robot KUKA youBot’s hardware and ROS-software], BS thesis, 2019

- Georg Astok, Roboti juhtimine virtuaalreaalsuses kasutades ROS-raamistikku [Creating virtual reality user interface using only ROS framework], BS thesis, 2019

- Martin Hallist, Robotipõhine kaugkohalolu käte liigutuste ülekandmiseks [Teleoperation robot for arms motions], BS thesis, 2019

- Ahmed Hassan Helmy Mohamed, Software integration of autonomous robot system for mixing and serving drinks [Jooke valmistava ja serveeriva robotsüsteemi tarkvaralahendus], BS thesis, 2019

- Kristo Allaje, Draiveripakett käte jälgimise seadme Leap Motion™ kontroller kasutamiseks robootika arendusplatvormil ROS [Driver package for using Leap Motion™ controller on the robotics development platform ROS], BS thesis, 2018

- Martin Maidla, Avatud robotiarendusplatvormi Robotont omniliikumise ja odomeetria arendamine [Omnimotion and odometry development for open robot development platform Robotont], BS thesis, 2018

- Raid Vellerind, Avatud robotiarendusplatvormi ROS võimekuse loomine Tartu Ülikooli Robotexi robootikaplatvormile [ROS driver development for the University of Tartu’s Robotex robotics platform], BS thesis, 2017

- Kalle-Gustav Kruus, Jalgpalliroboti löögimehhanismi elektroonikalahendus [Driver circuit for a kicking mechanism of a football robot], BS thesis, 2013

- Sven Kautlenbach, Autonoomne seade elastsusmooduli mõõtmiseks [Electronic device for Young’s modulus measurements], BS thesis, 2011