Lammps

Lammps minimal install on Linux

1. Download Lammps current C++ tarball and extract it.

2. Install either OpenMPI or MPICH2. I found that MPICH2 works slightly better. You will need both the base and development packages for your system. For example:

- yum install mpich2.x86_64

- yum install mpich2-devel.x86_64

Note where the framework has been installed.

3. Set your PATH to point to the MPI binaries. Edit the user's .bash_profile and add the MPI bin directory to the existing PATH modification there. This takes effect in your next login session. To add it to PATH in your current session, type the following (substitute the correct bin directory):

- export PATH=$PATH:/lib64/mpich2/bin
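The .bash_profile edit in step 3 can also be scripted. The following is a minimal sketch, not part of the original instructions: the helper name add_mpi_to_profile is hypothetical, and it appends a separate export line rather than editing the existing PATH modification. It only appends the line if it is not already present, so it is safe to run more than once.

```shell
# Hypothetical helper: append a PATH export to a profile file only once.
# $1 = MPI bin directory (assumption: replace with your real location)
# $2 = profile file to edit (defaults to ~/.bash_profile)
add_mpi_to_profile() {
    mpi_bin="$1"
    profile="${2:-$HOME/.bash_profile}"
    # \$PATH stays literal so it is expanded at login, not now
    line="export PATH=\$PATH:$mpi_bin"
    # grep -F: fixed string, -x: match the whole line, -q: no output
    if ! grep -Fxq "$line" "$profile" 2>/dev/null; then
        printf '%s\n' "$line" >> "$profile"
    fi
}
```

For example, run add_mpi_to_profile /lib64/mpich2/bin, start a new session, and then check that command -v mpicc prints a path inside that directory.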

4. Make sure the system can find the MPI libraries. Open /etc/ld.so.conf. It should contain either a list of library directories or a line such as:

include ld.so.conf.d/*.conf

In the first case, add your MPI library directory, for example:

/lib64/mpich2/lib

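In the latter case, go to the directory named in the include statement and create a new file there. Make sure the file name matches the pattern in the include statement (here, it must end in .conf). Put your MPI library directory path into that file. In either case, run ldconfig as root afterwards so that the linker cache picks up the change.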
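The drop-in variant of step 4 can be sketched as a small shell function. This is an illustration under assumptions: the helper name register_mpi_libdir and the file name mpich2.conf are hypothetical, and the default directory assumes the include line shown above.

```shell
# Hypothetical helper: write a drop-in file naming the MPI library directory.
# $1 = MPI library directory (assumption: replace with your real location)
# $2 = drop-in directory (defaults to /etc/ld.so.conf.d)
register_mpi_libdir() {
    libdir="$1"
    confdir="${2:-/etc/ld.so.conf.d}"
    # The name mpich2.conf is arbitrary; it only has to match the *.conf pattern
    printf '%s\n' "$libdir" > "$confdir/mpich2.conf"
}
```

Run as root, register_mpi_libdir /lib64/mpich2/lib followed by ldconfig should make the libraries visible; ldconfig -p lists the cached libraries, so piping it through grep mpi is one way to confirm.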

5. Download the minmpi makefile (Makefile.minmpi) and put it into the src/MAKE directory of your lammps tree.

6. Go to the src directory and type the following (the -j switch enables parallel compilation; remove it if it causes problems):

- make -j minmpi

7. If no errors occur, you now have the executable file lmp_minmpi. If the compilation failed because of missing dependencies, you need to tell lammps where the MPI includes and libraries are. Edit Makefile.minmpi and make sure that MPI_INC points to the directory containing mpi.h, MPI_PATH points to the library directory, and MPI_LIB links the MPI library. For example:

MPI_INC = -I/usr/include/mpich2-x86_64/

MPI_PATH = -L/lib64/mpich2/lib

MPI_LIB = -lmpi

Go back to src and type

- make clean-all

- make -j minmpi
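If it is unclear which directory to use for MPI_INC in step 7, the header can be searched for. The sketch below is an illustration only; the function name find_mpi_include_dirs is hypothetical, and the search root is whatever include tree your distribution uses.

```shell
# Hypothetical helper: print each directory under $1 that contains mpi.h.
# $1 = directory tree to search, e.g. /usr/include
find_mpi_include_dirs() {
    find "$1" -name mpi.h -type f 2>/dev/null | while read -r header; do
        # Keep only the containing directory, which is what -I expects
        dirname "$header"
    done | sort -u
}
```

For example, find_mpi_include_dirs /usr/include prints candidate directories for the -I flag. With MPICH-family compiler wrappers, mpicc -show also prints the include and library flags the wrapper would use, which can be compared against the values in Makefile.minmpi.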

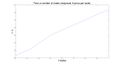

Scaling tests

Lammps calculation time scales linearly with the number of atoms, and increasing the number of processors decreases calculation time proportionally. The work also distributes well across different processor nodes. Simulations were run with a timestep of 1 ps for 10 000 timesteps.
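A proportional decrease means that the run time on p processors is close to the single-processor time divided by p, that is, a parallel efficiency near 1. The sketch below shows one way to compute speedup and efficiency from measured wall-clock times. The function name parallel_efficiency is hypothetical, and the numbers in the usage note are made-up illustrations, not results from these tests.

```shell
# Hypothetical helper: print speedup and parallel efficiency.
# $1 = wall-clock time on 1 processor
# $2 = wall-clock time on p processors
# $3 = p, the number of processors
parallel_efficiency() {
    awk -v t1="$1" -v tp="$2" -v p="$3" 'BEGIN {
        speedup = t1 / tp                  # how many times faster than serial
        printf "%.2f %.2f\n", speedup, speedup / p
    }'
}
```

With illustrative timings of 100 s on one processor and 25 s on four, parallel_efficiency 100 25 4 prints 4.00 1.00, which is the ideal case; an efficiency well below 1 would indicate communication or load-balancing overhead.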